Adaptive design

Overview

Traditional clinical trial design

Clinical trials are experiments conducted to gather evidence of the benefits and harms of medical treatments or interventions. Researchers test study treatments in a series of phases, starting with small-scale trials to assess harms and feasibility (e.g., whether it is possible to deliver the treatment). If successful, testing eventually moves to large-scale trials that compare the benefits of the treatment. The success of a treatment at each phase is judged by whether it meets set targets that balance its observed benefits and harms. Researchers traditionally conduct clinical trials in distinct phases, and this process takes several years before effective and safe treatments are established for use in clinical practice. The development and testing pathway of a study treatment differs depending on the nature of the treatment; for example:

When planning a clinical trial, researchers need to make several choices informed by the information available at the time. Some of these choices are summarised in Table 1.

Researchers need to make several assumptions before the trial begins based on:

- available data - published or unpublished information (e.g. from pilot or feasibility studies 6);

- clinical knowledge; and

- additional expert opinion.

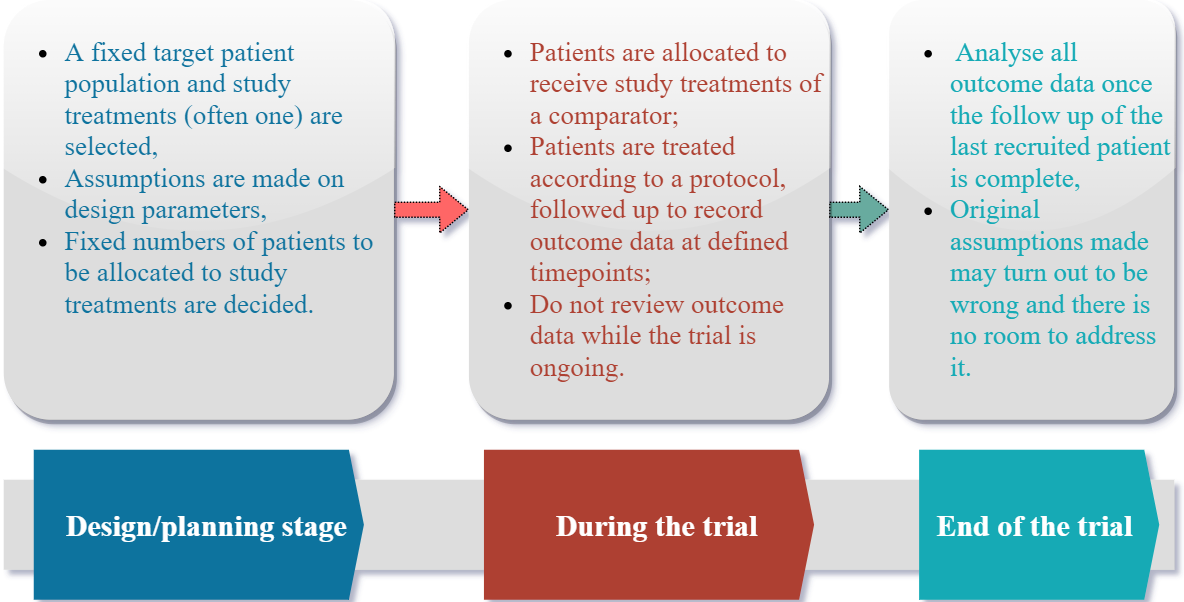

These assumptions may be uncertain, yet they are typically not assessed during the trial, still less corrected. The “fixed trial design” (non-adaptive, summarised in Figure 1) does not allow outcome data from patients already recruited to be used, as the trial progresses, to amend its future direction. Instead, data assessments are usually limited to recruitment, completeness and safety, with formal analyses undertaken only when all outcome data have been collected. By this stage, researchers cannot do anything about wrong initial assumptions or guesses, except conduct a 'post-mortem'.

References

1. Profil. Trial stages of clinical research. Date accessed, 10 Feb 2019.

2. GSK. Clinical trial phases. Date accessed, 10 Feb 2019

3. Craig et al. Developing and evaluating complex interventions: new guidance. 2019.

4. O’Cathain et al. Guidance on how to develop complex interventions to improve health and healthcare. BMJ Open. 2019;9:e029954.

5. McCulloch et al. No surgical innovation without evaluation: the IDEAL recommendations. Lancet. 2009;374:1105–12.

6. Eldridge et al. Defining feasibility and pilot studies in preparation for randomised controlled trials: Development of a conceptual framework. PLoS One. 2016;11.

Can we do better?

A fixed trial design may fail to address the intended research questions if the initial assumptions, guesses or decisions made about the trial are wrong. Most importantly, it does not allow quick clinical decisions, for example in emergency or pandemic situations such as Ebola or COVID-19 (see 1, 2), because researchers must wait until data from all recruited patients have been gathered, even if the study treatment turns out to be ineffective. In most cases, the trial therefore needs to run for a long time to collect these data, which is costly in both time and money.

In summary, a fixed trial design is not flexible, and often cannot quickly address complex clinical questions that are relevant to patients while balancing the needs of patients (within and outside the trial), the medical and scientific community, and research funders.

What are “design parameters”?

Design parameters quantify what we expect (or hope) to see in our trial and directly affect how we design it. To illustrate the importance of design parameters, the text below is taken from the RATPAC randomised trial of point-of-care (PoC) testing for cardiac markers in an emergency medicine setting 3. The primary endpoint was successful discharge, defined as a patient being discharged from the emergency department within four hours of arrival, with no subsequent cardiac admissions within 30 days.

“Previous data suggested that 50% of the routine care group would be successfully discharged. With 1565 evaluable subjects in each arm the trial would have 80% power to detect a 5% improvement (to 55% of patients successfully discharged) at the two-sided significance level of 5%.”

In this case, these design parameters were:

- 50%: the percentage of patients expected to be successfully discharged within routine care (control arm).

- 55%: the percentage of patients that researchers hoped would be successfully discharged using PoC testing (intervention arm). This means PoC testing would increase the chance of successful discharge by an absolute 5%. This is also referred to as the minimal clinically important difference, since any increase below 5% would be considered not clinically meaningful.

- 5%: the statistical significance level, meaning there is a one-in-twenty (or 5%) risk of us concluding that PoC testing was genuinely better than routine care when, in fact, PoC testing did not affect a patient’s true chance of being discharged. 5% is a conventional choice for statistical significance; this is also known as the false positive rate.

- 80%: the statistical power. If PoC testing and routine care truly work as researchers believe, i.e., PoC testing has a 55% discharge rate and routine care has a 50% discharge rate, then there is an 80% chance researchers will conclude that PoC testing is better than routine care. Conversely, this means there is a one-in-five (20%) chance that researchers would incorrectly fail to conclude that there is a benefit from PoC testing if it is truly beneficial; this is known as the false negative rate.

Many trials build in an adjustment for withdrawal, loss to follow-up or missing data. Researchers assumed this would be minimal since discharge should be recorded for all participants, so they implicitly assumed 0% missing data.

Therefore, using these design parameters, researchers needed to recruit 1565 participants per arm (3130 patients in total) to be able to detect a treatment difference of an absolute 5%, whilst controlling the false positive and false negative rates at 5% and 20%, respectively.
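The 1565-per-arm figure can be reproduced with the standard normal-approximation formula for comparing two proportions. The sketch below is a minimal Python example assuming that formula (pooled variance under the null hypothesis, unpooled under the alternative, no continuity correction); the function name `n_per_arm` is hypothetical, and the RATPAC investigators may have used different software or a slightly different formula.

```python
import math
from statistics import NormalDist


def n_per_arm(p_control: float, p_intervention: float,
              alpha: float = 0.05, power: float = 0.80) -> int:
    """Patients per arm to compare two proportions with a two-sided test.

    Normal approximation without continuity correction: pooled variance
    under the null hypothesis, unpooled variance under the alternative.
    """
    z = NormalDist()                    # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # about 1.96 for a two-sided 5% level
    z_beta = z.inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (p_control + p_intervention) / 2
    sd_null = math.sqrt(2 * p_bar * (1 - p_bar))
    sd_alt = math.sqrt(p_control * (1 - p_control)
                       + p_intervention * (1 - p_intervention))
    delta = abs(p_intervention - p_control)
    n = ((z_alpha * sd_null + z_beta * sd_alt) / delta) ** 2
    return math.ceil(n)  # round up to whole patients


n = n_per_arm(0.50, 0.55)  # RATPAC design parameters
print(n, 2 * n)  # 1565 3130
```

Because the required number of patients scales roughly with the inverse square of the target difference, halving the assumed 5% difference would approximately quadruple the sample size, which is why this single design parameter dominates the size of the trial.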

Why might these design parameters be wrong?

In nearly every case, our design parameters are estimates - or, if you prefer, guesses. These can be wrong for many reasons including:

- failure to do a thorough literature search or obtain prior data;

- using prior literature and/or data from a different population, in a different setting, or on a different version/dose of the intervention;

- chance.

In the RATPAC trial, our design parameters were very wrong. We had assumed half the patients receiving routine care would be successfully discharged: the actual proportion was around 13% (see Figure 1, sample size re-estimation). For PoC testing the figure was 32%, meaning we increased the percentage of successfully discharged patients by an absolute 19% - far greater than the 5% we had proposed (see group sequential design).

What happens if our design parameters are wrong?

As always, it depends. The RATPAC trial recruited more participants than it needed for the primary outcome: the trial could have stopped earlier, saved money, and concluded sooner that PoC testing is effective (see 4, 5 and the group sequential design section for a discussion of some of the methods that could have been used to do so). It is, however, more common for trials to overestimate the treatment effect and thereby underestimate the required sample size. This reduces the power to detect the minimal clinically important difference, which in turn may lead to more ambiguous trials in which the intervention is neither proven nor disproven to be efficacious. Irrespective of whether the sample size is overestimated or underestimated, trials can be inefficient.
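To show how an optimistic treatment effect erodes power, the sketch below computes approximate power for a fixed sample size using the same normal approximation. It assumes, purely for illustration, a trial sized at 1565 per arm for a 50% versus 55% comparison in which the true intervention rate is only 53%; the 53% figure and the function name `power_two_proportions` are hypothetical.

```python
import math
from statistics import NormalDist


def power_two_proportions(p_control: float, p_intervention: float,
                          n_per_arm: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided two-proportion z-test.

    Ignores the negligible chance of rejecting in the wrong direction.
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    p_bar = (p_control + p_intervention) / 2
    sd_null = math.sqrt(2 * p_bar * (1 - p_bar))
    sd_alt = math.sqrt(p_control * (1 - p_control)
                       + p_intervention * (1 - p_intervention))
    delta = abs(p_intervention - p_control)
    return z.cdf((delta * math.sqrt(n_per_arm) - z_alpha * sd_null) / sd_alt)


designed = power_two_proportions(0.50, 0.55, 1565)  # about 0.80
smaller = power_two_proportions(0.50, 0.53, 1565)   # about 0.39
print(f"designed: {designed:.2f}, if the true difference is 3%: {smaller:.2f}")
```

In this hypothetical scenario the trial would have less than a coin-flip chance of detecting a genuine 3% improvement, which is the kind of ambiguous outcome described above.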

Do adaptive designs help make my design parameters right?

First and foremost, adaptive designs cannot solve all problems that arise from poor planning of a clinical trial. Trial design should incorporate as much reliable information as possible. The most obvious example is when the sample size is grossly underestimated; an adaptation which doubles the required sample size may be too hard to implement.

This notwithstanding, adaptive designs can certainly help. The RATPAC trial did not need to recruit for as long as it did; the PoC testing intervention had shown enough value early on in the trial (see RATPAC retrospective case study). In other cases, an optimistic design parameter can sometimes, but not always, be corrected.

Consider the trial below, which evaluated the diaphragmatic pacing system (DPS) for people with amyotrophic lateral sclerosis 6.

“The trial was powered to detect a 12 month survival improvement from 45% to 70%, corresponding to a hazard ratio (HR) of 0.45. With a schedule of 18 months' recruitment, 12 months' follow-up, and control group survival of 20% at 24 months and 10% at 30 months, 108 patients (54 per group) were needed to record the 64 events required by the log-rank test, with 85% power, a two-sided type I error of 5%, and 10% additional dropout.”
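The quoted hazard ratio can be checked against the quoted survival figures. Assuming proportional hazards, survival in the intervention arm at any time equals control-arm survival raised to the power of the hazard ratio, so HR = ln(S_intervention) / ln(S_control). A minimal Python sketch follows; the function name is hypothetical.

```python
import math


def hazard_ratio_from_survival(s_control: float, s_intervention: float) -> float:
    """Hazard ratio implied by two survival proportions at the same time point.

    Assumes proportional hazards, under which S_int(t) = S_ctrl(t) ** HR.
    """
    return math.log(s_intervention) / math.log(s_control)


hr = hazard_ratio_from_survival(0.45, 0.70)  # 12-month survival in the design
print(round(hr, 2))  # 0.45
```

The unrounded value is about 0.447, consistent with the 0.45 quoted above.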

The hazard ratio (0.45) was optimistic, but with good reason. DPS is a relatively expensive device that, at the time of the trial, cost around £15,000, plus additional overheads to cover an initial surgical procedure and ongoing maintenance. The funding body (the UK National Institute for Health Research) funds research that offers value for money by addressing whether study treatments are clinically effective and cost-effective when delivered in practice. DPS needed to achieve survival benefits of this magnitude to convince the healthcare provider (the UK National Health Service, NHS) of its worth. The investigators did not plan this as an adaptive design, mainly for a practical reason: adaptive designs need sufficient data on which to base decisions. A median survival of 11 months in the control arm, together with a 12 month recruitment phase, meant there was too little outcome data (i.e., recorded deaths) available before the trial completed its recruitment. Furthermore, in hindsight, it would have been inefficient to plan for a sample size re-estimation on its own, as the observed treatment effect was smaller than the expected effect size. It seems logical to allow an increase in sample size only when the design parameters were incorrect but the interim results are promising, as defined by some prespecified criterion.
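One common way to make "promising" concrete is to compute conditional power at an interim analysis (the probability of a statistically significant final result if the currently observed trend continues) and to allow a sample size increase only when it falls in an intermediate zone. The sketch below assumes a normally distributed test statistic, a two-sided 5% level, and illustrative zone boundaries of 0.36 and 0.80; the function names and thresholds are hypothetical and are not taken from the DiPALS or RATPAC trials. A real design would also need a method that preserves the type I error rate when the sample size is increased.

```python
import math
from statistics import NormalDist


def conditional_power(z_interim: float, info_fraction: float,
                      alpha: float = 0.05) -> float:
    """Conditional power under the current trend (Brownian-motion approximation).

    z_interim: interim z-statistic, positive values favouring the intervention.
    info_fraction: proportion of the planned information observed so far (0 to 1).
    """
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    # If the observed trend continues, the final z-statistic has mean
    # z_interim / sqrt(t) and standard deviation sqrt(1 - t) given the interim data.
    mean_final = z_interim / math.sqrt(info_fraction)
    return 1 - z.cdf((z_crit - mean_final) / math.sqrt(1 - info_fraction))


def interim_zone(z_interim: float, info_fraction: float,
                 lower: float = 0.36, upper: float = 0.80) -> str:
    """Classify an interim result using hypothetical conditional power zones."""
    cp = conditional_power(z_interim, info_fraction)
    if cp < lower:
        return "unfavourable"  # keep the planned sample size (or consider futility)
    if cp < upper:
        return "promising"     # a sample size increase may be worthwhile
    return "favourable"        # the planned sample size is likely sufficient


for z_t in (1.0, 1.5, 2.2):  # hypothetical interim results at half the information
    print(z_t, round(conditional_power(z_t, 0.5), 2), interim_zone(z_t, 0.5))
```

This mirrors the reasoning above: when the observed effect is clearly smaller than expected, the result falls in the unfavourable zone and extra patients are not added.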

In context

Researchers may naturally be concerned that their design assumptions are incorrect, and this can quickly become clear once the trial has started. Many trials overestimate or underestimate the required number of patients 7, 8. Across disease areas, only a very small proportion of treatments entering phase 1 receive regulatory approval following phase 3 trials 9, 10, 11, 12, and only around 50% of phase 2 trials meet milestones (i.e., promising safety and efficacy) to progress to phase 3. This highlights some problems with the way trials are traditionally conducted (see 13); some of these studies could have been planned to use accumulating data to enable more dynamic decision-making. The same applies to later-phase trials: fewer than 20% of treatment comparisons in phase 3 trials funded by the NIHR Health Technology Assessment programme produced clinically and statistically significant results in favour of study treatments that would change practice 14. In reality, most research resources and time are spent, often over several years, studying treatments that do not translate into practice because they are ineffective or unsafe. Most randomised trials (~80%) 15 evaluate only one study treatment against a comparator, even when multiple competing treatment options are available.

All these issues motivate a change in the way researchers design and conduct trials, towards adaptive designs that use the information learned from accruing trial data to address the limitations of fixed trial designs.

References

1. Stallard et al. Efficient adaptive designs for clinical trials of interventions for COVID-19. Stat Biopharm Res. 2020;0(0)1-15

2. Brueckner et al. Performance of different clinical trial designs to evaluate treatments during an epidemic. PLoS One. 2018;13(9).

3. Goodacre et al. The Randomised Assessment of Treatment using Panel Assay of Cardiac Markers (RATPAC) trial: a randomised controlled trial of point-of-care cardiac markers in the emergency department. Heart. 2011;97(3):190–6.

4. Sutton et al. Influence of adaptive analysis on unnecessary patient recruitment: reanalysis of the RATPAC trial. Ann Emerg Med. 2012;60(4):442-8.

5. Dimairo. The utility of adaptive designs in publicly funded confirmatory trials. University of Sheffield. 2016.

6. McDermott et al. Safety and efficacy of diaphragm pacing in patients with respiratory insufficiency due to amyotrophic lateral sclerosis (DiPALS): A multicentre, open-label, randomised controlled trial. Lancet Neurol. 2015;14(9):883–92.

7. Clark et al. Sample size determinations in original research protocols for randomised clinical trials submitted to UK research ethics committees: review. BMJ. 2013;346(mar21_1):f1135.

8. Vickers. Underpowering in randomized trials reporting a sample size calculation. J Clin Epidemiol. 2003;56(8):717–20.

9. Smietana et al. Trends in clinical success rates. Nat Rev Drug Discov. 2016;15(6):379–80.

10. Wong et al. Estimation of clinical trial success rates and related parameters. Biostatistics. 2019;20(2):273–286.

11. BIO. Clinical development success rates 2006-2015. 2016.

12. Hussain et al. Clinical trial success rates of anti-obesity agents: the importance of combination therapies. Obes Rev. 2015;16(9):707–14.

13. Grignolo et al. Phase III trial failures: costly, but preventable. Appl Clin Trials. 2016;25(8).

14. Dent et al. Treatment success in pragmatic randomised controlled trials: a review of trials funded by the UK Health Technology Assessment programme. Trials. 2011;12(1):109.

15. Juszczak et al. Reporting of multi-arm parallel-group randomized trials. JAMA. 2019;321(16):1610.

What is an adaptive design?

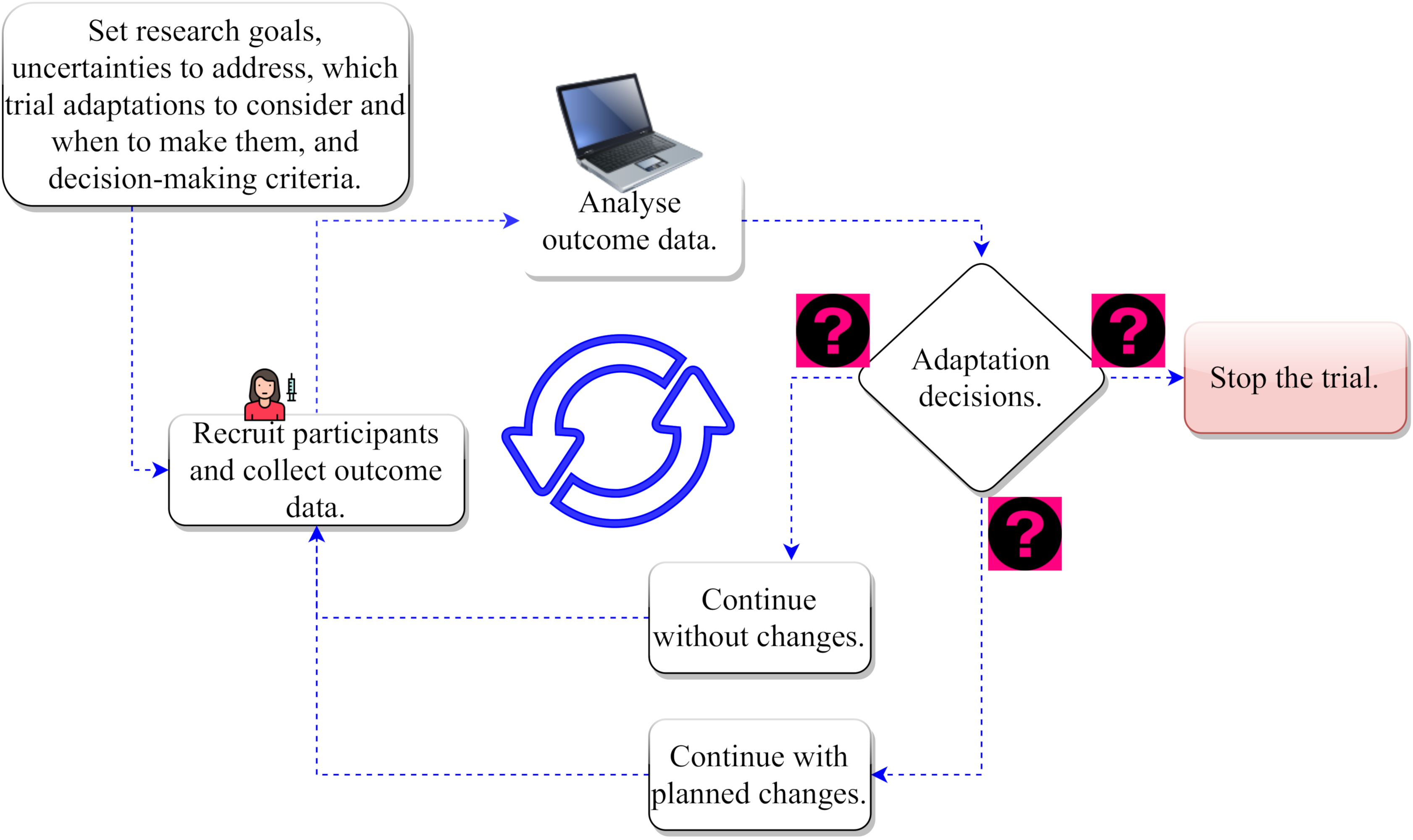

In an adaptive clinical trial design (see Figure 2), researchers start from the same place by making assumptions on design parameters. However, they cast their net wider by acknowledging uncertainties around these assumptions. This allows them to have flexibility to make necessary changes to parts of the trial once they gain more knowledge over the course of the study. These changes are informed by what they are learning from outcome data or information gathered from a group of patients already recruited as the trial progresses (interim data).

Thus, there are potentially multiple paths the trial can take, depending on what the emerging data show. This is similar to driving a car with your eyes open 1, using the real-time travel information available to you (e.g., road signs and a satellite navigation system): you stop at a red traffic light, take the quickest route to your destination, change route when there is a diversion on the current one, or abandon your journey when all routes are blocked.

It is important to note that researchers do not make things up as they go along! Whilst flexibility has advantages, unplanned changes affect both the validity of statistical inference and the practicalities of running the trial, and may undermine the credibility of the results. The types of change (trial adaptations) and the criteria for making them need to be considered in advance. More specifically, the criteria and timing of possible adaptations are decided at the design stage and specified in trial documents (e.g., the trial protocol and statistical analysis plan). Researchers should not compromise scientific rigour in running the trial, so that it produces reliable and valid results that can influence practice.

In summary, the overall goal of an adaptive design is to address research questions that are relevant to clinical practice quickly and efficiently while balancing ethical and scientific interests.

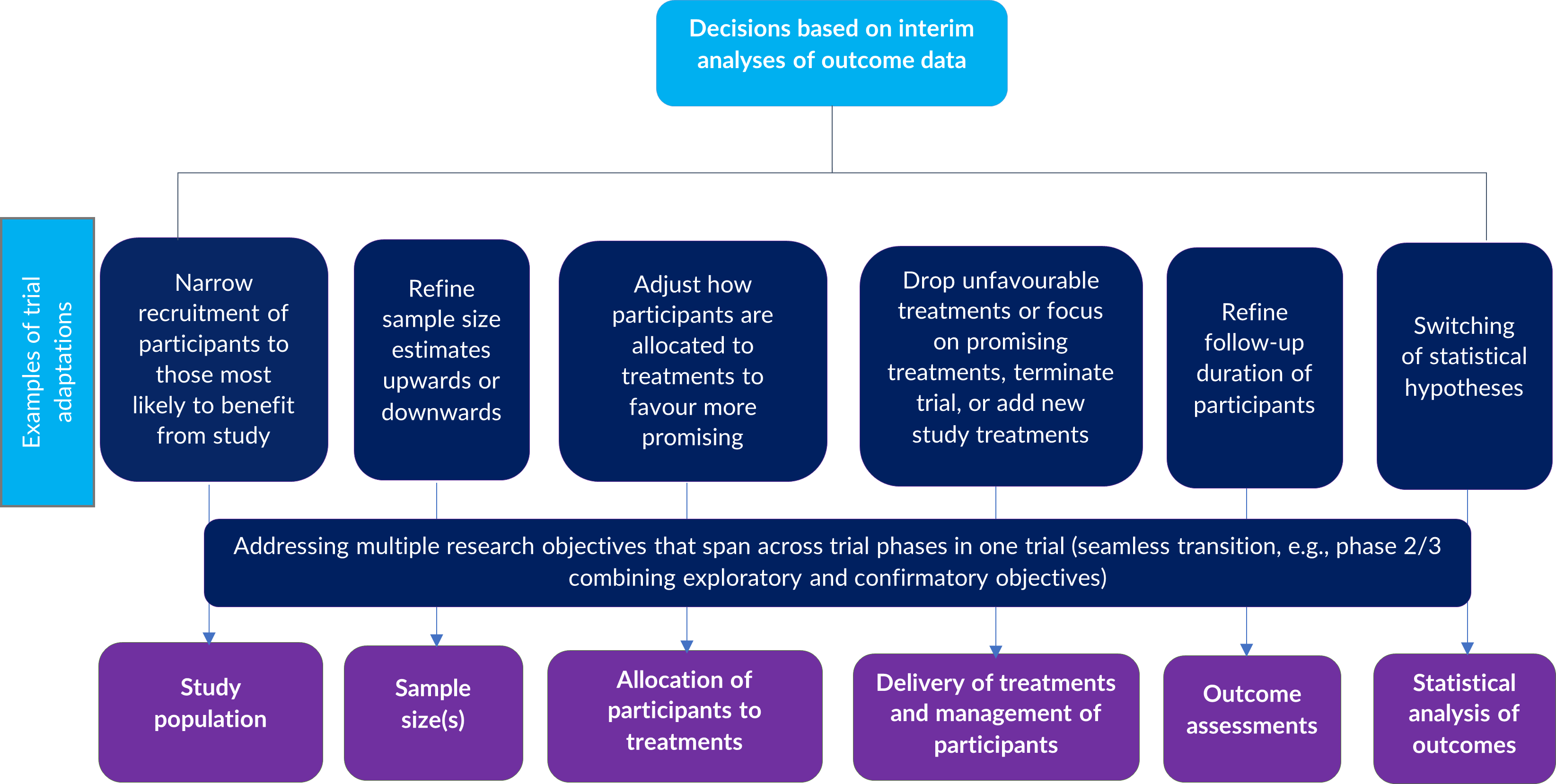

PANDA users may wish to learn more about adaptive designs, the scope and goals of possible adaptations that can be made during the trial, and the motivations behind them (see 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22). Some possible adaptations in randomised trials include:

- changing how many patients should be recruited to the trial (sample size re-estimation);

- stopping the trial once sufficient evidence is gathered to make reliable conclusions 21 (e.g., the study treatment is shown to be effective or ineffective given what has been observed so far) (see group sequential design for an example);

- closing study treatment options (e.g., different doses or schedules of the same treatment, different treatment combinations, or completely different treatment options) to further recruitment when they are shown to be ineffective or unsafe (see multi-arm multi-stage design). New or emerging treatment options can also be added;

- seamlessly moving from early- to late-phase trials and addressing multiple research questions in one trial under one protocol. For example, a phase 2/3 trial may combine learning objectives from phase 2 (e.g., to assess early activity or efficacy signals and safety to select promising treatments for further testing) with confirmatory objectives as in phase 3 (see multi-arm multi-stage design);

- changing the eligibility criteria for patients to be enrolled in the trial to include patients who, in light of emerging evidence, have specific characteristics that may be associated with improved response to treatments (see adaptive population enrichment design);

- changing the probability that new patients are allocated to study treatments (e.g., patients may be more likely to be allocated to better-performing treatments) (see response adaptive randomisation design);

- a combination of multiple adaptations such as stated above in a single trial.
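As a concrete illustration of one adaptation in the list above, response adaptive randomisation with a binary outcome can be sketched by giving each arm a Beta posterior and setting allocation probabilities in proportion to the posterior probability that each arm is best (a Thompson-sampling-style rule). This is only one of several possible rules and is not presented as the method PANDA recommends; the uniform Beta(1, 1) prior, the interim counts and the function name `allocation_probabilities` are hypothetical.

```python
import random


def allocation_probabilities(successes, patients, draws=20_000, seed=2024):
    """Estimate P(arm is best) for each arm by Monte Carlo sampling.

    Each arm's response rate gets a Beta(1 + successes, 1 + failures)
    posterior (uniform prior); the returned values can be used as
    randomisation probabilities for the next patients.
    """
    rng = random.Random(seed)  # fixed seed so results are reproducible
    wins = [0] * len(successes)
    for _ in range(draws):
        samples = [rng.betavariate(1 + s, 1 + n - s)
                   for s, n in zip(successes, patients)]
        wins[samples.index(max(samples))] += 1  # arm with the highest draw "wins"
    return [w / draws for w in wins]


# Hypothetical interim data: 10/50 responders on arm A, 25/50 on arm B.
probs = allocation_probabilities([10, 25], [50, 50])
print([round(p, 3) for p in probs])
```

Allocating in direct proportion to these probabilities can be aggressive when data are sparse, so practical designs commonly use a burn-in period, temper the probabilities, or cap them.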

Figure 3 summarises examples of common modifications that can be made to trial design aspects at different stages of the trial to address specific uncertainties or to achieve certain objectives or goals.

PANDA users should be aware that the appropriateness of an adaptive design with specific adaptations is primarily dictated by the research question(s) being considered, the logistical constraints likely to be present, and what the researchers want to achieve. The adaptive design should be feasible to implement and its potential value should be assessed at the design stage. Not all research questions can be addressed by using an adaptive design.

One main objective of PANDA is to provide guidance on when to choose an adaptive design, and if so, which one, as well as to familiarise users with considerations when running them.

References

1. Berry Consultants. https://www.berryconsultants.com/adaptive-designs/. Date accessed: 19/10/2020

2. Lewis. The pragmatic clinical trial in a learning health care system. Clin Trials. 2016;13(5):484–92.

3. Pallmann et al. Adaptive designs in clinical trials: why use them, and how to run and report them. BMC Med. 2018;16(1):29.

4. Zang et al. Adaptive clinical trial designs in oncology. Chinese Clin Oncol. 2014;3(4):49.

5. Bhatt et al. Adaptive designs for clinical trials. N Engl J Med. 2016;375(1).

6. Kairalla et al. Adaptive designs for comparative effectiveness research trials. Clin Res Regul Aff. 2015;32(1):36–44.

7. Kairalla et al. Adaptive trial designs: a review of barriers and opportunities. Trials. 2012;13(1):145.

8. Chow et al. Adaptive design methods in clinical trials - a review. Orphanet J Rare Dis. 2008;3:11.

9. Cirulli et al. Adaptive trial design: Its growing role in clinical research and implications for pharmacists. Am J Heal Pharm. 2011;68(9):807–13.

10. Mahajan et al. Adaptive design clinical trials: methodology, challenges and prospect. Indian J Pharmacol. 2010;42(4):201–7.

11. Rong. Regulations on adaptive design clinical trials. Pharm Regul Aff Open Access. 2014;03(01).

12. Thorlund et al. Key design considerations for adaptive clinical trials: A primer for clinicians. BMJ. 2018;p. k698.

13. Brown et al. Adaptive designs for randomized trials in public health. Annu Rev Public Health. 2009;30(1):1–25.

14. Wang et al. Adaptive design clinical trials and trial logistics models in CNS drug development. European Neuropsychopharmacology. 2011;21:159–66.

15. Park et al. Critical concepts in adaptive clinical trials. Clin Epidemiol. 2018;10:343–51.

16. Stallard et al. Efficient adaptive designs for clinical trials of interventions for COVID-19. Stat Biopharm Res. 2020;1–26.

17. Burnett et al. Adding flexibility to clinical trial designs: an example-based guide to the practical use of adaptive designs. BMC Med. 2020;18(1):352.

18. Mandrola et al. Adaptive trials in cardiology: Some considerations and examples. Can J Cardiol. 2021;37(9):1428–37.

19. Lauffenburger et al. Designing and conducting adaptive trials to evaluate interventions in health services and implementation research: practical considerations. BMJ Med. 2022;1(1):158.

20. Crawford et al. Adaptive clinical trials in stroke. Stroke. 2024.

21. Ciolino et al. Guidance on interim analysis methods in clinical trials. J Clin Transl Sci. 2023.

22. Kaizer et al. Recent innovations in adaptive trial designs: A review of design opportunities in translational research. J Clin Transl Sci. 2023;7

How often are adaptive designs used in randomised trials?

The idea of changing aspects of a clinical trial's design using accruing study data is not new, but it took many years for researchers, regulators and funders to be receptive to the concept. The scope of trial adaptations has increased over time as more statistical methods have been developed to address emerging research needs. The use of adaptive designs in randomised trials is steadily increasing, although they still account for a relatively small fraction of trials.

PANDA users may wish to read more on the types of these adaptive designs and the scope of adaptations used in practice 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 24, 25, 26, 27, 29. These adaptive designs can be applied across disease areas, though the opportunities to use them in certain clinical areas (e.g., emergency medicine 18 and oncology) may be greater than in others. Several researchers have studied why the uptake of adaptive designs is very low despite an ever-growing body of literature on related statistical methods 1, 2, 3, 10, 11, 19, 20, 21, 22, 23.

A notable barrier mentioned by many researchers is a lack of knowledge of adaptive designs and of how to apply them in practice. PANDA aims to help bridge this practical knowledge gap by educating multidisciplinary researchers in clinical trials. PANDA users may wish to read these accessible tutorial papers on the concepts of adaptive designs, which include real-life case studies.

References

1. Dimairo et al. Cross-sector surveys assessing perceptions of key stakeholders towards barriers, concerns and facilitators to the appropriate use of adaptive designs in confirmatory trials. Trials. 2015;16:585.

2. Morgan et al. Adaptive design: Results of 2012 survey on perception and use. Ther Innov Regul Sci. 2014;48:473–81.

3. Hatfield et al. Adaptive designs undertaken in clinical research: a review of registered clinical trials. Trials. 2016;17:150.

4. Sato et al. Practical characteristics of adaptive design in phase 2 and 3 clinical trials. J Clin Pharm Ther. 2017;1–11.

5. Bothwell et al. Adaptive design clinical trials: a review of the literature and ClinicalTrials.gov. BMJ Open. 2018;8

6. Wang et al. Evaluation of the extent of adaptation to sample size in clinical trials for cardiovascular and CNS diseases. Contemp Clin Trials. 2018;67:31–6.

7. Gosho et al. Trends in study design and the statistical methods employed in a leading general medicine journal. J Clin Pharm Ther. 2018;43:36–44.

8. Collignon et al. Adaptive designs in clinical trials: from scientific advice to marketing authorisation to the European Medicine Agency. Trials. 2018;19:642.

9. Cerqueira et al. Adaptive design: a review of the technical, statistical, and regulatory aspects of implementation in a clinical trial. Ther Innov Regul Sci. 2020;54(1):246–58.

10. Quinlan et al. Barriers and opportunities for implementation of adaptive designs in pharmaceutical product development. Clin Trials. 2010;7:167–73.

11. Hartford et al. Adaptive designs: results of 2016 survey on perception and use. Ther Innov Regul Sci. 2020;54(1):42–54.

12. Bauer et al. Application of adaptive designs – a review. Biometrical J. 2006;48:493–506.

13. Elsäßer et al. Adaptive clinical trial designs for European marketing authorization: a survey of scientific advice letters from the European Medicines Agency. Trials. 2014;15:383.

14. Lin et al. CBER’s experience with adaptive design clinical trials. Ther Innov Regul Sci. 2015;50:195–203.

15. Yang et al. Adaptive design practice at the Center for Devices and Radiological Health (CDRH), January 2007 to May 2013. Ther Innov Regul Sci. 2016;50:710–7.

16. Stevely et al. An Investigation of the shortcomings of the CONSORT 2010 statement for the reporting of group sequential randomised controlled trials: A methodological systematic review. PLoS One. 2015;10:e0141104.

17. Mistry et al. A literature review of applied adaptive design methodology within the field of oncology in randomised controlled trials and a proposed extension to the CONSORT guidelines. BMC Med Res Methodol. 2017;17:108.

18. Flight et al. Can emergency medicine research benefit from adaptive design clinical trials? Emerg Med J. 2017;34(4):243–8.

19. Kairalla et al. Adaptive trial designs: a review of barriers and opportunities. Trials. 2012;13:145.

20. Dimairo et al. Missing steps in a staircase: a qualitative study of the perspectives of key stakeholders on the use of adaptive designs in confirmatory trials. Trials. 2015;16:430.

21. Meurer et al. Attitudes and opinions regarding confirmatory adaptive clinical trials: a mixed methods analysis from the Adaptive Designs Accelerating Promising Trials into Treatments (ADAPT-IT) project. Trials. 2016;17:373.

22. Coffey et al. Overview, hurdles, and future work in adaptive designs: perspectives from a National Institutes of Health-funded workshop. Clin Trials. 2012;9:671–80.

23. Coffey et al. Adaptive clinical trials: progress and challenges. Drugs R D. 2008;9:229–42.

24. Zhang et al. A systematic review of randomised controlled trials with adaptive and traditional group sequential designs – applications in cardiovascular clinical trials. BMC Med Res Methodol. 2023;23:1–9.

25. Lee et al. Adaptive design clinical trials: current status by disease and trial phase in various perspectives. Transl Clin Pharmacol. 2023;31(4):202–16.

26. Huang et al. The characteristics and regulations of adaptive designs from 2008 to 2020: An overview of European Medicines Agency approvals. Int J Clin Pharmacol Ther. 2023;61(10):445–54.

27. Wang et al. A systematic survey of adaptive trials shows substantial improvement in methods is needed. J Clin Epidemiol. 2024;167.

28. Gilholm et al. Adaptive clinical trials in pediatric critical care: A systematic review. Pediatr Crit Care Med. 2023;24.

29. Ben-Eltriki et al. Adaptive designs in clinical trials: a systematic review-part I. BMC Med Res Methodol. 2024;24(1):229.

Opportunities and considerations for adaptive designs in different research areas

In principle, adaptive designs can be used across trial phases and across disease areas or indications; however, the opportunities to use them may be greater in some areas than in others, for a variety of reasons. PANDA users may wish to read about the use of adaptive designs in different research contexts, with examples, in the references below.

References

1. Lauffenburger et al. Designing and conducting adaptive trials to evaluate interventions in health services and implementation research: practical considerations. BMJ Med. 2022;1(1):158.

2. Mandrola et al. Adaptive trials in cardiology: Some considerations and examples. Can J Cardiol. 2021;37(9):1428–37.

3. Mukherjee et al. Adaptive designs: Benefits and cautions for neurosurgery trials. World Neurosurg. 2022;161:316–22.

4. Flight et al. Can emergency medicine research benefit from adaptive design clinical trials? Emerg Med J. 2017;34(4):243–8.

5. Jaki et al. Multi-arm multi-stage trials can improve the efficiency of finding effective treatments for stroke: a case study and review of the literature. BMC Cardiovasc Disord. 2018;18(1):215.

6. Stallard et al. Efficient adaptive designs for clinical trials of interventions for COVID-19. Stat Biopharm Res. 2020;1–26.

7. Parsons et al. Group sequential designs in pragmatic trials: feasibility and assessment of utility using data from a number of recent surgical RCTs. BMC Med Res Methodol. 2022;22:1–18.

8. Crawford et al. Adaptive clinical trials in stroke. Stroke. 2024.

9. Zhang et al. A systematic review of randomised controlled trials with adaptive and traditional group sequential designs – applications in cardiovascular clinical trials. BMC Med Res Methodol. 2023;23:1–9.

10. Hayward et al. Adaptive trials in stroke: Current use and future directions. Neurology. 2024;103.

11. Lee et al. Adaptive design clinical trials: current status by disease and trial phase in various perspectives. Transl Clin Pharmacol. 2023;31(4):202–16.

12. Jaki et al. Multi-arm multi-stage trials can improve the efficiency of finding effective treatments for stroke: a case study and review of the literature. BMC Cardiovasc Disord. 2018;18(1):215.

13. Ryan et al. Bayesian adaptive clinical trial designs for respiratory medicine. Respirology. 2022.

14. Gilholm et al. Adaptive clinical trials in pediatric critical care: A systematic review. Pediatr Crit Care Med. 2023;24.

15. Ben-Eltriki et al. Adaptive designs in clinical trials: a systematic review-part I. BMC Med Res Methodol. 2024;24(1):229.

16. Potvin et al. Adaptive designs in dermatology clinical trials: Current status and future perspectives. J Eur Acad Dermatol Venereol. 2024;38(9):1694–703.
