General considerations about adaptive trials

Planning and design

What is the rationale for considering an adaptive design?

At the design stage, researchers should use the most appropriate and feasible design to address the research questions. Adaptive designs do not solve every research question or problem, but they can provide benefits when used in the right context. It is not advisable to tailor research questions to suit an adaptive design without a clear scientific rationale, simply because the design is in vogue or appeals to certain stakeholders. The need for an adaptive design and the appropriateness of its adaptive features should be dictated by the research questions and goals.

An adaptive design should be used when it adds value in addressing research questions compared to alternative trial designs. Thus, researchers need to understand available opportunities offered by adaptive designs and how their value can be assessed (e.g., fewer patients required, shorter expected trial duration, costs saved, higher precision in estimating treatment effects) in a given context as well as their limitations. PANDA users may wish to read these tutorial papers highlighting the research questions that can be addressed or goals that can be achieved by using adaptive designs 1, 2, 3 as well as purpose and reasoning behind interim analyses for early stopping for efficacy, futility, or harm with real-life examples 4.

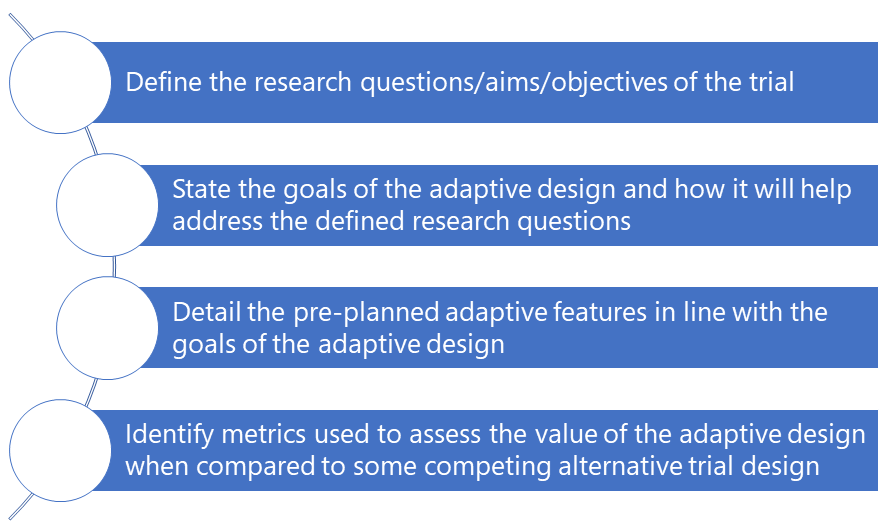

Figure 1 can help the research team in the thought process to decide if an adaptive design is needed.

References

1. Burnett et al. Adding flexibility to clinical trial designs: an example-based guide to the practical use of adaptive designs. BMC Med. 2020;18(1):352.

2. Pallmann et al. Adaptive designs in clinical trials: why use them, and how to run and report them. BMC Med. 2018;16:29.

3. Park et al. Critical concepts in adaptive clinical trials. Clin Epidemiol. 2018;10:343–51.

4. Ciolino et al. Guidance on interim analysis methods in clinical trials. J Clin Transl Sci. 2023;7(1):e124.

Do not overcomplicate the adaptive features: keep it simple and manageable

An adaptive design is not a tool to avoid good planning of the study. The main goal in every trial is to produce reliable and interpretable results that meet the research objectives. The design of a clinical trial is made up of many components and investigators may have uncertainty in specifying some of these (e.g., the number of treatment arms, dosing levels and regimen, outcomes to measure and their variability). Therefore, it may be tempting for researchers to design their trial with lots of adaptive features to address these uncertainties. However, depending on the nature of these multiple trial adaptations, this may threaten the integrity and validity of trial results if they are not implemented properly. For example, the statistical properties of an adaptive design with lots of adaptive features can be difficult to establish and understand, implementing the adaptive features may be practically complex, and the meaning of the results may be hard to interpret.

It is best to focus on just a few key trial adaptations that will address the most critical uncertainties and are likely to yield worthwhile gains while preserving the credibility and validity of trial results. This is essential in the confirmatory setting although in principle it applies across trial phases. For example, in a scenario where there is very little information available about the variability of the outcome measure at the design stage when the sample size is calculated, it is helpful to include an adaptive sample size recalculation step during the trial. See 1 for further discussions.
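As a rough illustration of why the sample size recalculation mentioned above can matter, the sketch below uses the standard normal-approximation formula for comparing two means, n per arm = 2(z_{1-α/2} + z_{1-β})² σ² / δ². All numbers (the standard deviations, the target difference, the error rates) are hypothetical, and the function name `n_per_arm` is ours. A real recalculation would follow a pre-specified method whose statistical properties have been assessed.

```python
from math import ceil
from statistics import NormalDist


def n_per_arm(sd: float, delta: float, alpha: float = 0.05, power: float = 0.90) -> int:
    """Participants per arm for a two-arm comparison of means (normal approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)
    return ceil(2 * (z_alpha + z_beta) ** 2 * sd ** 2 / delta ** 2)


# Hypothetical design-stage guess of the outcome standard deviation
planned = n_per_arm(sd=10.0, delta=5.0)

# Hypothetical interim re-estimate of the standard deviation (larger than assumed)
recalculated = n_per_arm(sd=12.0, delta=5.0)

print(f"planned n per arm: {planned}, recalculated n per arm: {recalculated}")
```

Because the required sample size scales with the square of the standard deviation, a modest underestimate at the design stage can leave a fixed design noticeably underpowered. This is the uncertainty a single, well-chosen adaptation is meant to address.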

References

1. Wason et al. When to keep it simple – adaptive designs are not always useful. BMC Med. 2019;17(1):152.

Implications of adaptations on other research goals/objectives

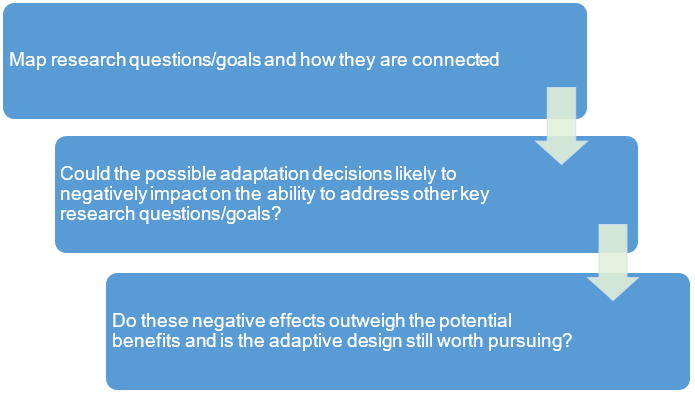

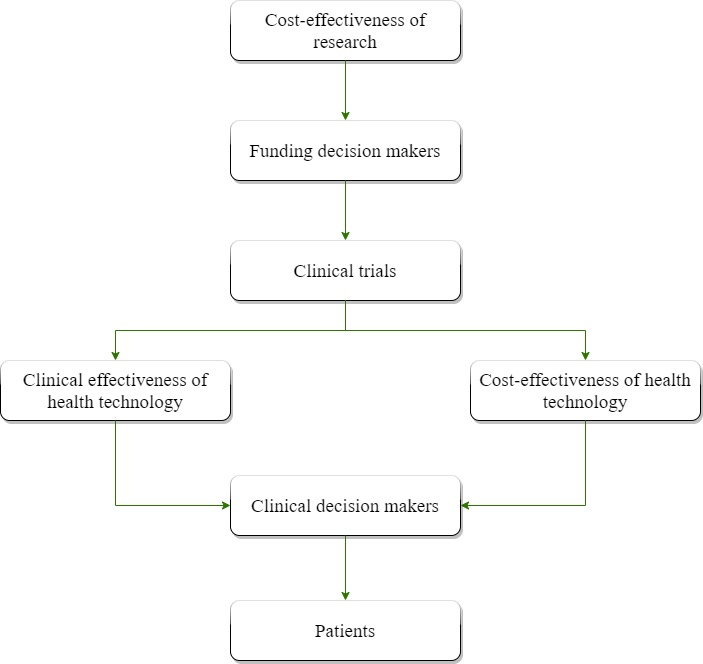

It is common to design trials with multiple research goals/objectives with varying degrees of importance and some of these may be interconnected in a way that will influence the overall interpretation of findings (e.g. clinical and cost-effectiveness) to change practice. Some adaptation decisions (e.g. early stopping for overwhelming clinical benefit) may impact on the ability to address other research goals/questions (e.g., health economic analysis or other secondary objectives, see 1, 2). A thought process like the one illustrated in Figure 2 may be helpful at the planning stage to assess the broader impact of adaptations.

If an adaptive design undermines the ability to address key research questions, then its value is questionable. For example, consider an adaptive design with an option to stop early for efficacy, where the interim analysis is scheduled so early that insufficient safety data have accrued. An alternative would be to schedule the interim analysis later so that the adaptive design is appropriate.

References

1. Wason et al. When to keep it simple – adaptive designs are not always useful. BMC Med. 2019;17(1):152.

2. Flight et al. A review of clinical trials with an adaptive design and health economic analysis. Value Health. 2019;22(4):391-398.

Adaptation outcomes and their appropriateness

Adaptive designs use interim data to decide whether a trial adaptation should be made. Each possible adaptation may depend on one or more outcome measures. These are known as adaptation outcomes. In some adaptive clinical trials, adaptation outcome(s) (e.g., used for treatment selection) and the primary outcome(s) used later, in the final analysis, can differ or can be a combination of both (e.g., 1, 4, 5). Just like the choice of the primary outcome(s), the choice of adaptation outcome(s) should be justified, focusing on its appropriateness to make robust trial adaptations (e.g., how reliable are the adaptation outcomes at informing the primary outcomes). This may relate to how the adaptation outcome informs the activity of the treatment effect to increase the chances of observing a good clinical response on the primary outcome (e.g., 2). Poor choice of adaptation outcomes threatens the efficiency of the adaptive design to achieve the research goals. For example, at an interim analysis, potentially effective treatments could be erroneously declared futile and dropped, or a certain patient subpopulation erroneously declared as unlikely to benefit from treatment and stopped. Researchers should not select adaptation outcomes that are not scientifically meaningful just to make the adaptive design feasible.

The time point when the adaptation outcomes are assessed is important. For operational feasibility of the adaptive design, quickly observed adaptation outcomes are often used so that we can learn quickly and make trial changes relative to the recruitment rate and treatment duration. If the recruitment to the trial is fast, even with short-term adaptation outcomes, there is only a small window of opportunity to make changes to the trial. For instance, some interim analyses may be redundant when all recruited participants, with the pending adaptation outcome, have already been treated (e.g., 3). In summary:

- identify key outcome measures for making trial adaptations;

- provide justification for choosing adaptation outcomes including when and how they are assessed;

- researchers should not select adaptation outcomes that are not scientifically meaningful just for the sake of making the adaptive design feasible.

References

1. Parsons et al. An adaptive two-arm clinical trial using early endpoints to inform decision making: Design for a study of subacromial spacers for repair of rotator cuff tendon tears. Trials. 2019;20:694.

2. Sydes et al. Issues in applying multi-arm multi-stage methodology to a clinical trial in prostate cancer: the MRC STAMPEDE trial. Trials. 2009;10:39.

3. Boeree et al. High-dose rifampicin, moxifloxacin, and SQ109 for treating tuberculosis: a multi-arm, multi-stage randomised controlled trial. Lancet Infect Dis. 2017;17(1):39-49.

4. Parsons et al. Group sequential designs in pragmatic trials: feasibility and assessment of utility using data from a number of recent surgical RCTs. BMC Med Res Methodol. 2022;22:1–18.

5. Parsons et al. Group sequential designs for pragmatic clinical trials with early outcomes: methods and guidance for planning and implementation. BMC Med Res Methodol. 2024;24(1).

Decision-making criteria for trial adaptation, when to conduct interim analyses, and how often

At interim analyses, given the outcome data observed up to that point, decisions are made on which pre-planned changes to make to the trial. The decision-making criteria should stipulate how and when the proposed trial adaptations will be used. This should include the:

- set of actions that will be taken given the interim observed data (e.g., stop recruiting to a treatment arm for futility);

- specified limits or parameters to trigger the adaptations (e.g., stopping boundaries) and;

- level of evidence required (e.g., for claiming a treatment arm is futile).

For example, in a trial of several treatment arms, we may specify that after 50 patients have been randomised to each arm, we will perform an interim analysis and any arm that has no more than 5 responders will be closed due to futility; this is based on ensuring that the chance of dropping a treatment arm with a true response probability of 20% or higher is no more than 5%.
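The arithmetic behind this futility rule can be checked with a short binomial calculation. The sketch below is a minimal illustration, assuming responses are independent with a common response probability; the helper name `prob_at_most` is ours. Because the chance of seeing 5 or fewer responders only decreases as the true response probability rises above 20%, checking the rule at exactly 20% covers the "20% or higher" case.

```python
from math import comb


def prob_at_most(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, x) * p**x * (1 - p) ** (n - x) for x in range(k + 1))


n_per_arm = 50         # patients randomised to each arm at the interim analysis
true_response = 0.20   # response probability we do not want to miss
max_drop_risk = 0.05   # tolerated chance of wrongly dropping such an arm

# Chance of closing an arm with a true 20% response rate under the "<= 5 responders" rule
risk_at_5 = prob_at_most(5, n_per_arm, true_response)

# Largest responder cut-off that keeps this risk within the tolerated limit
largest_cutoff = max(
    k for k in range(n_per_arm + 1)
    if prob_at_most(k, n_per_arm, true_response) <= max_drop_risk
)

print(f"risk of wrongly dropping the arm: {risk_at_5:.3f}")
print(f"largest cut-off meeting the limit: {largest_cutoff}")
```

Under these assumptions the risk is just under 5%, and raising the cut-off to 6 responders would exceed the limit, which is why 5 is the threshold quoted.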

The timing and frequency of interim analyses and decision-making criteria influence the statistical properties and robustness of the adaptive design as well as the credibility of trial results (if not done well). Although the timing and frequency of interim analyses are context-dependent (e.g., 1), the number of interim analyses should be feasible to implement, and they should be worthwhile. In practice, there is little benefit in performing a large number of interim analyses. For example, 1 to 2 interim analyses are most common in practice when early stopping is considered and rarely more than 4 or 5 are performed (see 2). However, there are some circumstances where more interim analyses can be useful provided the design remains feasible. For example, allocation probabilities can be automatically updated periodically (e.g., weekly or monthly) or after every patient provided it is worthwhile.

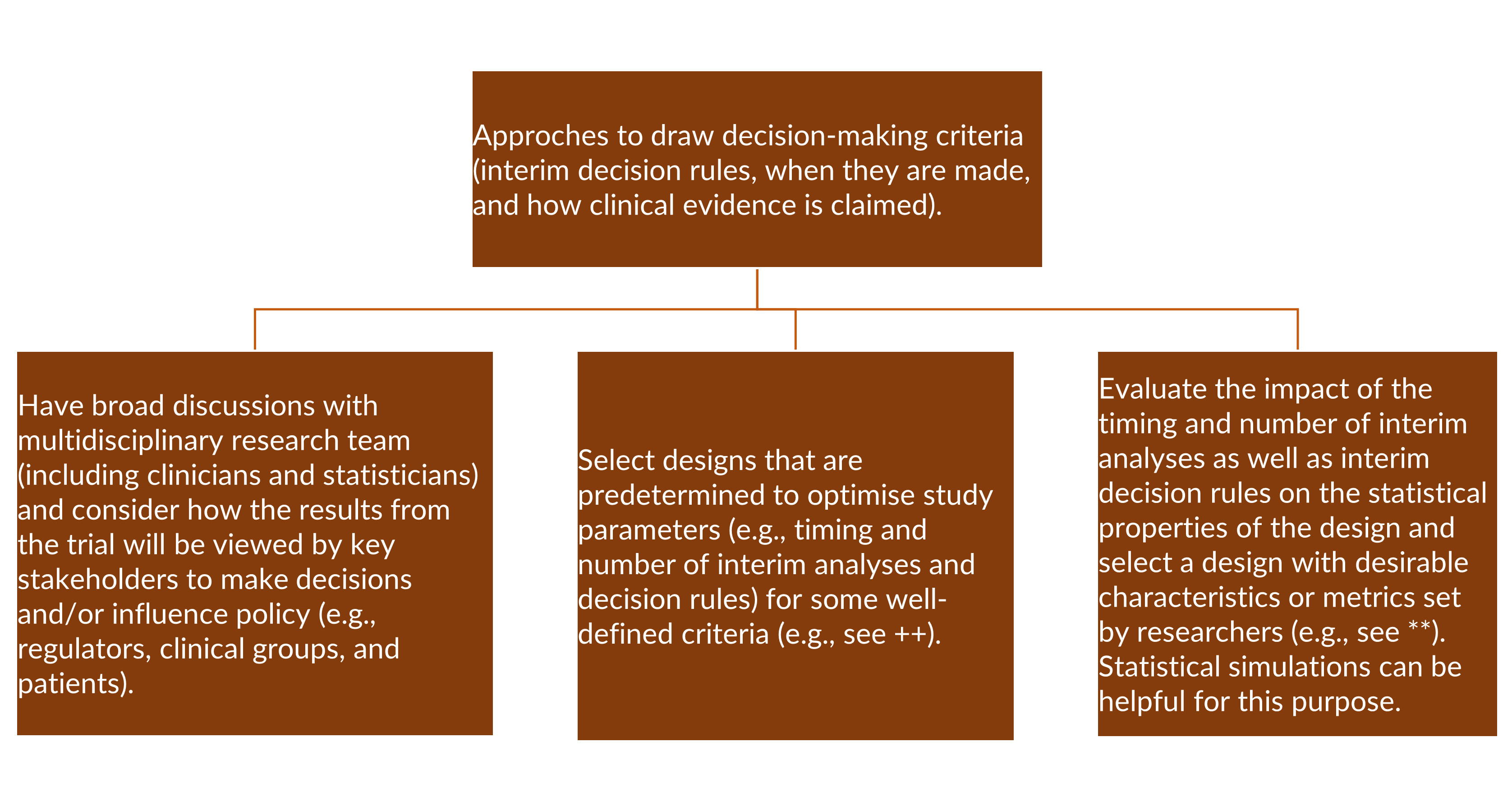

The first interim analysis should be timed to ensure sufficient information is available to make reliable adaptation decisions. A review of group sequential trials 2 found that the median interim sample size (or number of observed events for time-to-event outcomes) at early stopping was 65% (interquartile range 50% to 85%) of the planned maximum. On the other hand, undertaking interim analyses too late reduces the potential benefits of making trial adaptations. For example, there is little value in stopping the trial early when almost all participants have been recruited and treated, as the savings in costs, patients recruited, and time taken to address the research questions will be small. Interim analyses should be spaced to allow sufficient data to accrue between analyses. Some approaches to determine the number and timing of interim analyses are reflected in Figure 3.

Notes to Figure 3: ++ e.g., see 3, 4; ** e.g., see 1, 5, 6, 7, 8.

PANDA users may wish to read some useful examples illustrating the use of statistical simulation at the design stage to help researchers optimise or decide the appropriate timing of interim analyses and decision rules to inform trial adaptations 9, 10.

References

1. Benner et al. Timing of the interim analysis in adaptive enrichment designs. J Biopharm Stat. 2018;28(4):622-632.

2. Stevely et al. An investigation of the shortcomings of the CONSORT 2010 Statement for the reporting of group sequential randomised controlled trials: A methodological systematic review. PLoS One. 2015;10(11).

3. Bratton. Design issues and extensions of multi-arm multi-stage clinical trials. University College London. 2015.

4. Magirr et al. Flexible sequential designs for multi-arm clinical trials. Stat Med. 2014;33(19):3269-3279.

5. Hughes et al. Informing the selection of futility stopping thresholds: case study from a late-phase clinical trial. Pharm Stat. 8(1):25-37.

6. Blenkinsop et al. Assessing the impact of efficacy stopping rules on the error rates under the multi-arm multi-stage framework. Clin Trials. 2019;16(2):132-141.

7. Jiang et al. Impact of adaptation algorithm, timing, and stopping boundaries on the performance of Bayesian response adaptive randomization in confirmative trials with a binary endpoint. Contemp Clin Trials. 2017;62:114-120.

8. Hummel et al. Using simulation to optimize adaptive trial designs: applications in learning and confirmatory phase trials. Clin Investig. 2015;5(4):401–13.

9. Dimairo et al. Statistical simulation reports for the proposed trial designs to address research questions within the TipTop platform. The University of Sheffield. 2024.

10. Van Unnik et al. Development and evaluation of a simulation-based algorithm to optimize the planning of interim analyses for clinical trials in ALS. Neurology. 2023;100:E2398–408.

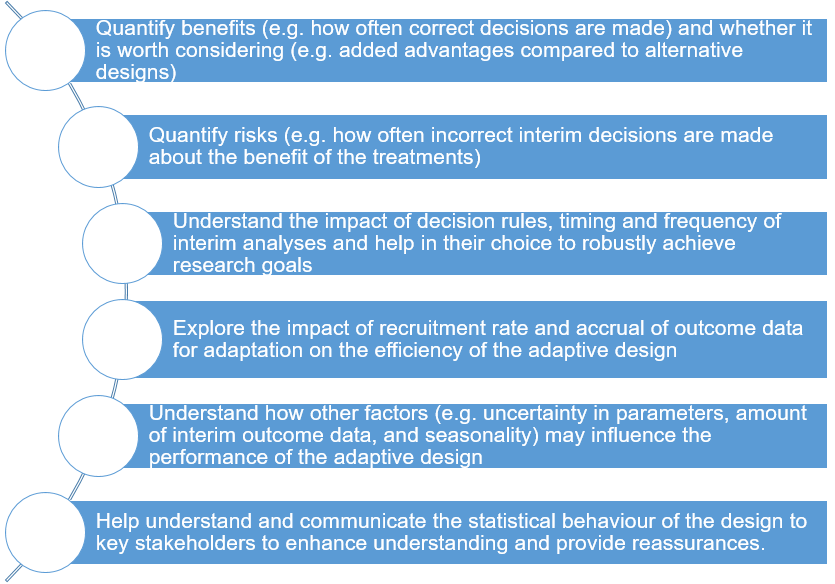

Assessing the operating characteristics of the adaptive design

The benefits and limitations of an adaptive design compared to some alternative design should be based on clearly defined and relevant metrics. It is essential to understand situations when the design is beneficial and when it may fail to meet the research goals. Assess the robustness of the adaptive design using available tools which can be analytical evaluations or simulations. In some situations, performing statistical simulation under a number of plausible scenarios is helpful to understand the behaviour of the proposed adaptive design (e.g., 4, 5) as well as whether it is feasible to implement. For example, simulation can help researchers to address some issues highlighted in Figure 4. In principle, existing general guidance (see 1, 2, 3) can be used to guide researchers to conduct statistical simulation when using adaptive designs.

Not all adaptive designs require statistical simulation: it is unnecessary when superior analytical evaluation methods are available.
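To make the idea of simulating under plausible scenarios concrete, the sketch below estimates two simple operating characteristics, the probability of stopping early for futility and the expected sample size, for a single treatment arm with a binary response. The design values (interim after 50 patients, stop if 5 or fewer respond, maximum of 100 patients), the scenarios, the seed, and the function name `simulate_arm` are all illustrative assumptions. A simple design like this could also be evaluated analytically, and a real simulation study would follow the general guidance cited above.

```python
import random


def simulate_arm(true_response, n_interim=50, n_max=100,
                 futility_cutoff=5, n_sims=5000, seed=2024):
    """Estimate P(stop early for futility) and expected sample size for one arm."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    stopped_early = 0
    total_n = 0
    for _ in range(n_sims):
        # Number of responders among the patients observed at the interim analysis
        responders = sum(rng.random() < true_response for _ in range(n_interim))
        if responders <= futility_cutoff:
            stopped_early += 1
            total_n += n_interim   # arm closed at the interim analysis
        else:
            total_n += n_max       # arm continues to the maximum sample size
    return stopped_early / n_sims, total_n / n_sims


# Hypothetical scenarios for the true response probability
scenarios = [0.05, 0.10, 0.20, 0.30]
results = {p: simulate_arm(p) for p in scenarios}

for p, (p_stop, expected_n) in results.items():
    print(f"true response {p:.2f}: P(stop early) = {p_stop:.3f}, expected n = {expected_n:.1f}")
```

Tabulating results like these across scenarios shows where the design is beneficial (ineffective arms are usually closed early) and where it may fail (a worthwhile arm is occasionally dropped).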

References

1. Burton et al. The design of simulation studies in medical statistics. Stat Med. 2006;25:4279-4292.

2. Morris et al. Using simulation studies to evaluate statistical methods. Stat Med. 2019;38(11):2074-2102.

3. Mayer et al. Simulation practices for adaptive trial designs in drug and device development. Stat Biopharm Res. 2019;11(4):325–35.

4. Hummel et al. Using simulation to optimize adaptive trial designs: applications in learning and confirmatory phase trials. Clin Investig. 2015;5(4):401–13.

5. Mayer et al. Simulation practices for adaptive trial designs in drug and device development. Stat Biopharm Res. 2019;11:325–35.

Is the adaptive design feasible to implement?

At the planning stage, after determining that the adaptive design is a suitable choice to answer the research questions, it is essential to assess whether these adaptive features are feasible to implement during the trial. Not all adaptive designs are feasible to implement in practice even though on face value they may seem appropriate in a specific context. For troubleshooting, some feasibility assessment questions may include:

- can the maximum required sample size be achieved? An adaptive design may require more participants compared to a fixed sample size design, although there could be other advantages offered by the adaptive design;

- how do treatment duration, primary endpoints, and expected recruitment rate fit in with the proposed adaptive features? 2

- are the logistical aspects involved in running the trial manageable (e.g. treatment procurement and management)?

- is the infrastructure adequate to support the execution of the proposed adaptive features (e.g., statistical, clinical, trial management, and data management expertise)?

- is the planning time adequate to increase the chance of successful design and execution of the trial?

- how will regulatory aspects of the trial impact on the practicalities to implement the proposed adaptive features (e.g., time to obtain necessary approvals prior to commencement and during the trial such as when new treatment arms are added to an ongoing adaptive trial, see 1 for discussion)?

- what factors may delay interim decision making (e.g., time required to clean and analyse interim data, review interim analyses and convene a meeting of the independent data monitoring or adaptation committee)?

- can blinding be ensured, if relevant?

References

1. Schiavone et al. This is a platform alteration: A trial management perspective on the operational aspects of adaptive and platform and umbrella protocols. Trials. 2019;20:264.

2. Mukherjee et al. Adaptive designs: benefits and cautions for Neurosurgery trials. World Neurosurg. 2022;161:316–22.

Communicating the adaptive design effectively to stakeholders

Adaptive designs often require buy-in from multidisciplinary stakeholders such as:

- regulators,

- ethics committees,

- research team (e.g., clinicians, trial and data managers, and health economists),

- patients and the public,

- funders or sponsors,

- data monitoring or adaptation committee and trial steering committee 7.

The research team needs to understand the implications of the adaptive design on various aspects of running the trial and what is expected from them to successfully deliver. The data monitoring or adaptation committee, trial steering committee, ethics committees and regulators need to understand the adaptive design for them to be able to execute their roles and responsibilities. Effective communication of the adaptive design to all these key stakeholders is essential throughout the trial, especially at the planning/proposal development stage. It is advisable to engage regulators for advice very early on at the planning stage where relevant. There is growing regulatory guidance and papers reflecting on what regulators expect from researchers who are planning to use adaptive designs (e.g., 1, 2) as well as discussions on regulatory experiences on adaptive designs (e.g., 3, 4, 5, 6).

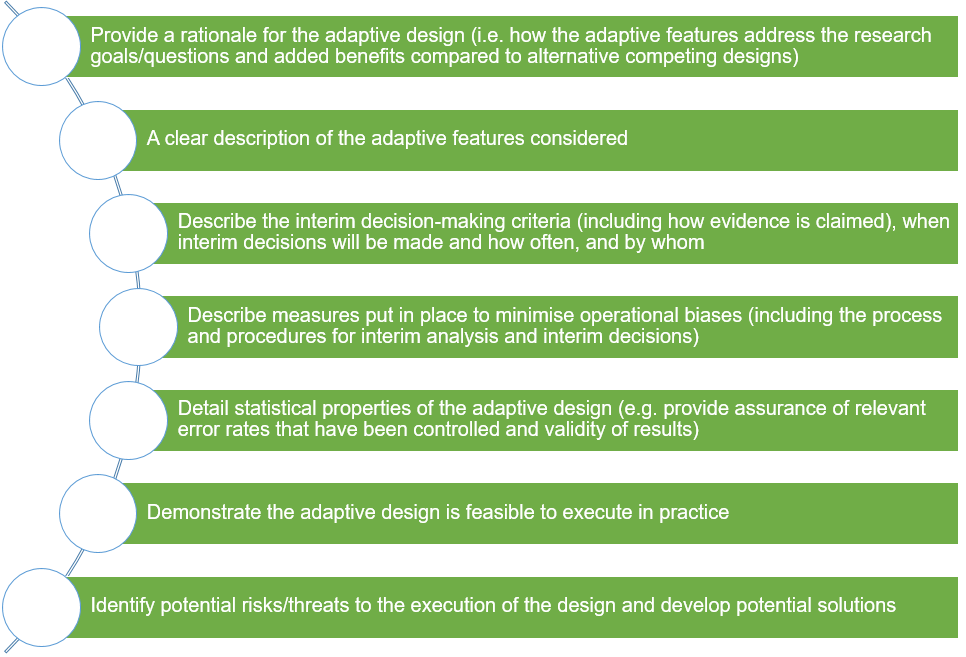

The communication of adaptive designs, especially in non-technical language, should be tailored to the needs of these targeted stakeholders depending on their roles. Figure 5 highlights some key details that should be effectively communicated.

References

1. FDA. Adaptive designs for clinical trials of drugs and biologics. Guidance for Industry. 2019.

2. CHMP. Reflection paper on methodological issues in confirmatory clinical trials planned with an adaptive design. 2007.

3. Elsäßer et al. Adaptive clinical trial designs for European marketing authorization: a survey of scientific advice letters from the European Medicines Agency. Trials. 2014;15(1):383.

4. Lin et al. CBER’s experience with adaptive design clinical trials. Ther Innov Regul Sci. 2015;50(2):195-203.

5. Collignon et al. Adaptive designs in clinical trials: from scientific advice to marketing authorisation to the European Medicine Agency. Trials. 2018;19(1):642.

6. Yang et al. Adaptive design practice at the Center for Devices and Radiological Health (CDRH), January 2007 to May 2013. Ther Innov Regul Sci. 2016;50(6):710-717.

7. Lane et al. A third trial oversight committee: Functions, benefits and issues. Clin Trials. 2020;17(1):106–12.

Need for adequate planning and available resources

Researchers should never use adaptive designs as an excuse or remedy for poor planning. In general, adaptive designs require more planning time compared to non-adaptive designs (fixed trial designs), although this depends on the complexity of the proposed adaptive features. Most of this time is spent on agreeing on adaptive features, choosing interim decision-making criteria for implementing the proposed trial adaptations, understanding the practical or operational aspects, and assessing the statistical properties of the design, which often requires some simulation work. This work often depends on the input and agreement of various multidisciplinary stakeholders (e.g., agreeing on interim decision-making criteria and setting simulation scenarios).

Furthermore, the simulation results will need to be presented to and discussed with these stakeholders, and scenarios often need fine-tuning and the process repeating. In particular, quality control involving another statistician is required to validate this work. All this time-consuming developmental work (often unfunded) needs to be done before or during the grant proposal stage, within the turnaround time set by funding call deadlines. PANDA also highlights available statistical software resources to help researchers. The experience of various stakeholders with adaptive designs is also expected to improve in the near future. In summary:

- it is essential for researchers to reserve adequate planning time and to seek existing design support resources or funding opportunities to help with developmental work when considering complex adaptive designs;

- required resources to deliver the adaptive trial should be adequately costed (see costing of adaptive trials).

Costing of adaptive trials

All trials should be well-resourced and costed properly to deliver them successfully. Adaptive trials tend to require more resources to support them in comparison to non-adaptive trials (fixed trial designs), assuming they recruit the maximum sample size (which when considering resources needed, can be seen as a “worst case scenario”) 1. This is because adaptive designs bring additional considerations in both the planning and conduct stages, which depend on the proposed trial adaptations and their complexity. Several factors drive the costing of adaptive trials at different stages of the trial 1. There is guidance to help researchers estimate staff and non-staff resources required and cost them appropriately at the planning stage 2, which PANDA users are encouraged to read. This guidance outlines a five-step iterative process focusing on:

- understanding the tasks required to support the trial,

- mapping how trial adaptations affect the tasks required to support the trial,

- working out how these affected tasks impact the resources required,

- dealing with funders’ expectations on resourcing and managing research contracts,

- justifying resources to the funder and refining the design.

Here, we summarise some key resourcing considerations for adaptive trials at the planning stage. Other additional considerations that often impact resources and turnaround time (to meet research calls) at the design stage are discussed under the “need for adequate planning and available resources”.

1) Impact of trial adaptations on uncertainty around the trial pathway

In adaptive trials, there are several pathways the trial may take depending on the trial adaptations triggered and acted upon. As such, the sample size(s) (or duration of participant follow-up to observe certain outcome events) required will typically not be known in advance. This makes the costing of adaptive trials by researchers and planning of funding models by research funders/sponsors more challenging. However, it is good practice to specify possible sample sizes based on scenarios of trial pathways and use these to estimate the resources required. The maximum resourcing required can then be communicated to the funder as a “worst case scenario”. Estimating resources based on the minimum sample size (best case scenario) and some scenarios in between the minimum and maximum sample sizes can also be useful, particularly if each scenario has well-defined probabilities of occurring (e.g., stopping at specified interim analyses).
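The scenario-based costing described above can be laid out as a small calculation. In the sketch below, the trial pathways, their probabilities, the fixed cost, and the cost per participant are all hypothetical placeholders in arbitrary cost units. In practice the probabilities would come from the evaluation of the design's operating characteristics, and costs rarely scale this simply with sample size.

```python
# Hypothetical trial pathways: (label, sample size, assumed probability of occurring)
pathways = [
    ("stop at first interim analysis", 100, 0.25),
    ("stop at second interim analysis", 200, 0.35),
    ("recruit to maximum sample size", 300, 0.40),
]

FIXED_COST = 250_000          # assumed set-up and core staff cost (arbitrary units)
COST_PER_PARTICIPANT = 1_500  # assumed per-participant cost (arbitrary units)


def trial_cost(n: int) -> int:
    """Total cost for a pathway that recruits n participants (simple linear model)."""
    return FIXED_COST + COST_PER_PARTICIPANT * n


# The pathway probabilities must cover every possible outcome
assert abs(sum(p for _, _, p in pathways) - 1.0) < 1e-9

best_case_cost = trial_cost(min(n for _, n, _ in pathways))
worst_case_cost = trial_cost(max(n for _, n, _ in pathways))
expected_n = sum(n * p for _, n, p in pathways)
expected_cost = sum(trial_cost(n) * p for _, n, p in pathways)

print(f"best case cost:  {best_case_cost}")
print(f"worst case cost: {worst_case_cost}")
print(f"expected sample size: {expected_n:.0f}, expected cost: {expected_cost:.0f}")
```

Reporting the worst case alongside the best case and the probability-weighted expectation gives the funder the maximum commitment together with a sense of how likely the cheaper pathways are.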

2) Impact of interim analyses on staff resources

Adaptive designs require interim analyses. Additional resources are usually required to support these interim analyses depending on their frequency, nature of trial adaptations considered, and complexity of the adaptive design. For example, additional statisticians with expertise in adaptive designs are required to develop the interim and final statistical analysis plans, undertake interim and final analyses including related quality controls, and support the trial throughout to final reporting. This may also apply to health economists depending on the trial design. In some cases, an external organisation or personnel may be required to conduct interim analyses to maintain the blinding of trial statisticians 3 and the research team, and to preserve the confidentiality of interim results (see measures to minimise operational biases for details).

Some complex adaptive trials tend to increase the time required for trial management. Additional resources are often needed to set up an adaptive trial, negotiate contracts (e.g., with treatment suppliers and recruiting sites), develop trial protocol(s) and participant information sheets (PIS), train site staff, and engage stakeholders to understand the adaptive design aspects and wide implications (e.g., data monitoring and trial steering committees, sponsor, public and patient representatives – see details on communicating the adaptive design effectively to stakeholders). There are additional data management tasks (at recruiting sites and centrally) to achieve timely and robust interim analyses and decisions, and implementation of adaptations (see data management considerations for adaptive trials for details).

Some trial adaptations (e.g., change in participant eligibility in adaptive population enrichment (APE) designs or addition of new treatment arm(s)) may also impact trial governance necessitated by amendments to the trial protocol(s), PIS, and (electronic) case report forms ((e)CRFs) as well as additional training of site staff and monitoring of recruiting sites.

3) Impact of trial adaptations on non-staff resources

The impact of trial adaptation decisions that may be triggered at interim analyses on trial conduct and resources should be explored at the planning stage. For example, dropping or adding new treatment arms, updating how participants are allocated to favour promising treatments, or refining participant eligibility by dropping certain subgroups require randomisation methods, data management plans, or information systems that can cope with the proposed design features. Bespoke or new systems/infrastructure may be required to cope with such trial adaptations. For example, in the TRICEPS trial (NIHR EME funded 3-arm 2-stage design with treatment selection), a validated randomisation system was developed to implement a minimisation algorithm that accommodated multiple study treatments with options to drop futile treatment arms. Resources to develop this randomisation system, which could be reused in future trials, were costed into the grant application.

4) Impact of trial adaptations on staff resources

Some adaptations (e.g., early trial stopping) may reduce the trial duration and resources required. As a result, staffing issues may arise depending on the size of the organisational research portfolio and contractual arrangements 1, 4. For example, how to deal with staff research contracts and how any leftover research funds will be spent if a trial is stopped early should be considered and agreed with the funder and sponsor where necessary. Some UK research funders have developed models to address this 2.

5) Justifying research costs to the funder

Many funders (e.g., the NIHR and MRC) review whether submitted research grant applications offer good value for money for funding based on several metrics and not solely on total cost. Such metrics include the importance of the research question(s); suitability and robustness of the proposed design and methods; whether appropriate stakeholders impacted by the research have been consulted, or are partners in research delivery (e.g., patients and public representatives and clinical groups); whether the research team has the right expertise; research feasibility; and whether costed resources have been justified and are affordable.

It is, therefore, essential to justify the trial adaptations proposed and their potential value in addressing the research question(s) (see communicating the adaptive design effectively to stakeholders for details). Moreover, the costs of requested resources should be clearly explained in grant applications, focusing on the research tasks and impacts of the trial adaptations considered.

References

1. Wilson et al. Costs and staffing resource requirements for adaptive clinical trials: quantitative and qualitative results from the Costing Adaptive Trials project. BMC Med. 2021;19:1–17.

2. Wason et al. Practical guidance for planning resources required to support publicly-funded adaptive clinical trials. BMC Med. 2022;20:1–12.

3. Iflaifel et al. Blinding of study statisticians in clinical trials: a qualitative study in UK clinical trials units. Trials. 2022;23:1–11.

4. Dimairo et al. Missing steps in a staircase: a qualitative study of the perspectives of key stakeholders on the use of adaptive designs in confirmatory trials. Trials. 2015;16:430.

Availability of relevant expertise to design and run the trial

The additional level of expertise required varies depending on the complexity of the proposed adaptive features. Running adaptive trials requires close collaboration between researchers with both statistical and non-statistical expertise (e.g., clinical and trial management), ideally with some previous experience of adaptive trial projects. This applies to those involved in the design and execution of the trial as well as those involved in its oversight. Specifically, adaptive designs require special statistical considerations to account for the adaptive features in the design, implementation of adaptations, and interim and final analyses. For instance, special methods are often required to control or evaluate characteristics of the design and to estimate treatment effects, uncertainty, and other quantities reliably (see 1 for discussion).

PANDA aims to support researchers and bridge the existing practical knowledge gap. Some open access practical tutorial papers are also available (e.g., see 1, 2).

References

1. Pallmann et al. Adaptive designs in clinical trials: why use them, and how to run and report them. BMC Med. 2018;16(1):29.

2. Burnett et al. Adding flexibility to clinical trial designs: an example-based guide to the practical use of adaptive designs. BMC Med. 2020;18(1):352.

Ethical considerations

All trials must be conducted in line with ethics requirements. Patients must provide informed consent after being given adequate information about the trial so that they can make an informed choice on whether to take part without being misled. In adaptive designs, circumstances may change as a result of the adaptation decisions made (e.g., more patients may be required, some treatment arms may be stopped early, the patient population may change). Thus, ethics committees need to understand the adaptive design, including its advantages, the possible adaptation decisions and their implications for patient care, and what information is given to participants prior to consent and during the trial.

Some ethics committee members may be unfamiliar with the adaptive design being considered, so effective communication is essential. In addition, researchers should:

- carefully strike a balance between the information they give to participants (e.g., in participant information sheets) and the need to minimise potential biases that could be introduced by disclosing too much information. For instance, consider whether information disclosed may influence participants who are recruited earlier to be systematically different from those enrolled later;

- develop participant information sheets in a way that explains the implications of possible adaptation decisions upfront, so that revised ethics approval is not required and delays in implementing adaptation decisions are minimised;

- address how participants’ care may need to change because of adaptation decisions and their clinical management;

- address how the data of participants who were treated before a decision to stop a treatment arm early, but who did not contribute to the interim analysis (overrun patients), will be handled during analysis (see 1).

References

1. Baldi et al. Overrunning in clinical trials: some thoughts from a methodological review. Trials. 2020;21(1):668.

Why pre-planning and documentation is essential

Regardless of the trial design, ad hoc (unplanned) changes to an ongoing trial may raise suspicions that can undermine the credibility and validity of the results. This concern is elevated when researchers have access to accumulating outcome data. In adaptive designs, to mitigate this concern, we prespecify possible adaptations that can be made including the decision-making criteria, when decisions are made and how often, on what basis the adaptations will be made, and measures put in place to minimise any potential operational biases. However, it is possible that unplanned changes may also occur during an adaptive trial. It is therefore important for readers of trial reports to be able to distinguish between planned changes (which are features of the adaptive design) and unplanned changes (that can happen in any adaptive or non-adaptive trial). Thus, it is essential to document all important aspects of the adaptive design in trial documents as evidence of pre-planning; for example, in the:

- trial protocol, and

- prespecified statistical analysis plan.

Importantly, this prespecification enhances adequate planning and also the evaluation of the operational and statistical properties of the adaptive design.

Some sensitive details of the design that may introduce biases if publicly disclosed can be documented in restricted study documents, to be disclosed when the trial is complete. For instance, the actual formulae used for computing certain quantities (e.g., revised sample size) may be restricted to prevent others from working backwards to infer the emerging treatment effect, which could bias the conduct of the trial.

Health economic considerations

Summary

- Like in fixed trial designs, trials that use adaptive designs tend to have multiple objectives and collect data on more than just the primary outcome;

- Trial adaptations can impact secondary objectives and failing to account for potential biases could result in incorrect decision-making potentially wasting resources, preventing patients from receiving effective treatments, or unintentionally harming patients;

- Some adaptive trials are designed with cost-effectiveness objectives alongside clinical effectiveness, and these can be used to inform the trial design as well as interim decision-making;

- It is important to consider these secondary outcomes when planning, conducting, and reporting an adaptive design clinical trial; and

- Researchers need to be aware of the additional considerations required when using an adaptive design with a health economic analysis.

Motivation

Countries have limited budgets for funding healthcare treatments and health research. Conducting efficient research is a priority 1. Research funders, such as the UK’s National Institute for Health Research (NIHR) and the Canadian Institutes of Health Research, need to decide how best to allocate resources. To do this they consider how the research will enhance clinical decisions and how much it will cost. In publicly funded healthcare settings, health technology adoption agencies, such as the National Institute for Health and Care Excellence (NICE), UK and the Pharmaceutical Benefits Advisory Committee, Australia, make decisions about which healthcare technologies (e.g., drugs, devices and other healthcare interventions) should receive funding. In the UK, these treatments are then made available to patients through the National Health Service (NHS). Technology adoption decisions are informed by evidence of both clinical and cost-effectiveness 2.

As illustrated in Figure 1, cost-effectiveness plays an important role in the allocation of health resources for both treatments and research. Before the trial begins, funding panels will consider whether a research proposal provides value for money, balancing what is already known, how much can be learnt from the research, and how much it will cost. Value of information analysis methods (described below) provide a framework for quantifying the cost-effectiveness of research 3.

Increasingly, clinical trials are used to collect data to inform an economic evaluation following the trial 4. This may include clinical data on primary and secondary outcomes, which can be used to estimate parameters in a health economic model, or costs and quality of life data collected directly from trial participants 5. The economic evaluation helps decision makers decide whether a treatment is worth funding in clinical practice.

In summary: As adaptive clinical trials are increasingly used, it is more likely that they will also be used as part of a value of information analysis or an economic evaluation to inform research budget allocation or treatment adoption decisions. Therefore, it is vital that researchers understand the potential for an adaptive design to impact health economic analyses and to explore opportunities to consider health economics and adaptive designs together.

Establishing the cost-effectiveness of research – value of information analysis (VOIA)

Healthcare decision makers are often faced with uncertainty when choosing which treatments to fund, and there is always a risk that better outcomes could have been achieved if an alternative decision had been made 6. Collecting additional information can reduce uncertainty in decisions 7, 8. However, this additional information often comes at a cost; for example, studying participants in a clinical trial can be costly, but will help researchers to learn more about the benefits of a treatment. The methods of VOIA provide a framework for quantifying the value of learning more information by balancing the benefits with the costs 3. This can be viewed as the returns we get from investing in conducting further research.

There are several methods for conducting a VOIA, which are summarised here; PANDA users may wish to read these papers for a comprehensive introduction 6, 9:

a) Expected value of perfect information (EVPI)

The EVPI considers the scenario where further research would eliminate all decision uncertainty, representing the most that can be gained from further research 10, 11, 12. It is potentially worthwhile conducting further research if the associated costs of conducting the proposed research are less than the EVPI 13.

b) Expected value of partially perfect information (EVPPI)

The EVPPI considers the value of eliminating all decision uncertainty for specific parameters that inform the decision. For example, eliminating all uncertainty about the costs of a treatment 6.

c) Expected value of sample information (EVSI)

As highlighted above, EVPI estimates the value of eliminating all uncertainty in a decision problem. It may, however, be more feasible to consider the value of reducing some of the uncertainty; for example, by conducting another clinical trial. The EVSI estimates the value of a specific research design that will produce results to inform a decision 15.

d) Expected net benefit of sampling (ENBS)

The ENBS is the EVSI at the population level minus the cost of conducting the proposed research, known as the cost of sampling 16. When the ENBS is greater than zero this suggests that it is worthwhile conducting the proposed research. The ENBS can be calculated for a range of research trial designs and the design with the highest ENBS is considered optimal from a health economic perspective 17. This has been extended in the context of the design of a group sequential trial 18.

It is important to note that the EVPI, EVPPI, EVSI and ENBS methods are about eliminating or reducing uncertainty in the health economic model parameters. Thus, if the economic model is wrong (for example, its structure is wrong or it excludes important parameters), all the resulting calculations will also be wrong. PANDA users may wish to read more about how the EVPI, EVPPI, EVSI and ENBS are calculated (see 9, 14).
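To make the EVPI and ENBS definitions more concrete, the following is a minimal Monte Carlo sketch for a two-option decision. It is illustrative only: the distributions for incremental QALYs and costs, the willingness to pay threshold of 20,000, and the function names are all assumptions introduced here, not values or methods from any real economic model. EVPI is computed as the expected net benefit when the best option is chosen for every parameter draw minus the expected net benefit of the option that is best on average.

```python
import random
from statistics import mean


def simulate_net_benefits(n_draws, threshold=20_000, seed=1):
    """Draw hypothetical net benefits for (standard care, new treatment)."""
    rng = random.Random(seed)
    draws = []
    for _ in range(n_draws):
        # Hypothetical uncertainty about incremental QALYs and costs.
        inc_qalys = rng.gauss(0.05, 0.04)
        inc_cost = rng.gauss(800, 300)
        nb_standard = 0.0  # standard care is the reference option
        nb_new = threshold * inc_qalys - inc_cost
        draws.append((nb_standard, nb_new))
    return draws


def evpi(draws):
    """Per-person EVPI from simulated net benefits, one tuple per draw."""
    n_options = len(draws[0])
    expected_nb = [mean(d[i] for d in draws) for i in range(n_options)]
    value_with_current_information = max(expected_nb)
    value_with_perfect_information = mean(max(d) for d in draws)
    return value_with_perfect_information - value_with_current_information


def enbs(evsi_per_person, population, cost_of_sampling):
    """Population-level EVSI minus the cost of the proposed research."""
    return evsi_per_person * population - cost_of_sampling


if __name__ == "__main__":
    simulated = simulate_net_benefits(n_draws=10_000)
    print(f"Per-person EVPI (hypothetical inputs): {evpi(simulated):.2f}")
```

Because every quantity is derived from the simulated model parameters, the sketch also shows why the results are only as good as the model: changing the assumed distributions changes the EVPI directly.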

The use of value of information analysis (VOIA) in practice

In the UK, the use of VOIA has been slowly increasing in healthcare decision-making. However, it is not routinely used by researchers to justify the need for research in grant applications for funding or by funders to assess value for money of the proposed research in their decision-making process 19, 20. Although there are numerous articles describing the methods, there are few real-life applications 21. Barriers to their routine use include their complexity, high computational burden, and areas of methodological contention around the effective population and time horizon used in calculations. To overcome the perceived barriers, the Collaborative Network for Value of Information (ConVOI) has developed resources to help researchers (see 22, 23). An ISPOR task force has developed good practice guidance for using VOIA methods in health technology assessments and to inform the prioritisation of research (see 6, 9).

Additional resources for conducting VOIA include the Rapid Assessment of Need for Evidence (RANE) tool that helps researchers to calculate the value of their research proposals quickly so that it can be included in the research funding application without the need for resource intensive methods 24. This R Shiny application requires inputs from the researcher relating to their primary outcome, interventions and aspects of the proposed research. The Sheffield Accelerated Value of Information (SAVI) R Shiny application also aids the calculation of the EVPI and EVPPI 25.

Economic evaluation: establishing the cost-effectiveness of a treatment

An economic evaluation is a “comparative analysis of alternative courses of action in terms of both their costs and consequences” 5. There are different approaches to conducting an economic evaluation and PANDA users may wish to read the comprehensive text 5.

A cost-utility analysis is the preferred approach for several healthcare decision-making bodies, including NICE in the UK and equivalent bodies in Canada and Australia 2, 26, 27. A cost-utility analysis compares the costs and benefits of competing treatments. Benefits are measured as quality adjusted life years (QALYs, see item 2 below) that summarise the gain in quality and quantity of life a treatment provides.

1) Costs

The key steps when calculating the costs of a treatment include identification, measurement, and valuation 28:

- Identification – relates to what costs should be included in the analysis. This will depend on the perspective of the analysis such as societal, patient, employer, and healthcare provider. In the UK, NICE stipulates that economic evaluations should use an NHS and personal and social services perspective 2. This should include all costs incurred by the NHS in the delivery of the treatment under evaluation;

- Measurement – relates to how much of each cost is incurred. This might be estimated using a micro-costing study, case report forms during a clinical trial or from existing data;

- Valuation – relates to the value associated with each cost. Each resource is valued using sources such as the Personal Social Services Research Unit (PSSRU) document of up-to-date unit costs for a range of health and social care services in England 29 and the British National Formulary (BNF) for drug costs 30.

2) Quality adjusted life years (QALYs)

In a cost-utility analysis, benefits are commonly measured using the QALY 2. A QALY is a measure of both the quantity and quality of life gained 5. The QALY is a ‘common currency’ that allows decision-makers to compare the benefits of a variety of interventions, across a range of disease areas 31. The QALY is calculated by multiplying the amount of time spent in a health state by its utility. Utility quantifies the quality of life experienced in a particular health state and is anchored between zero and one, where one represents full health and zero represents death. It is possible for utility values to fall below zero representing health states thought to be worse than death 32.

In the UK, NICE prefers utilities to be measured using the EQ-5D, a measure of health that does not depend on a specific illness, condition, or patient population 33. During a clinical trial, participants are often asked to complete the EQ-5D at several time points during the study to describe their quality of life at that given time. Their responses give a five-level descriptive health state, for example, 11111 describes someone in perfect health. The descriptive health state is then converted to a utility score based on existing algorithms for the population of interest. Currently, NICE recommends the five level health states are converted to a utility score based on a previous three level version of the EQ-5D 34, 35. QALYs are then calculated by multiplying utility scores by time.
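The QALY calculation described above (time spent in a health state multiplied by its utility) can be sketched as follows. The health states, durations, and utility values are hypothetical, and the function name is an assumption introduced for illustration; the sketch treats utility as constant within each period, which is a simplification of how trial data measured at several time points are usually handled.

```python
def total_qalys(health_states):
    """Sum QALYs over (years_in_state, utility) pairs.

    Utility is anchored at 1 (full health) and 0 (death); values below
    zero are allowed for states considered worse than death.
    """
    total = 0.0
    for years_in_state, utility in health_states:
        if years_in_state < 0:
            raise ValueError("Time spent in a health state cannot be negative")
        if utility > 1:
            raise ValueError("Utility cannot exceed 1 (full health)")
        total += years_in_state * utility
    return total


if __name__ == "__main__":
    # Hypothetical patient: 2 years at utility 0.8, then 1 year at 0.5.
    patient_history = [(2.0, 0.8), (1.0, 0.5)]
    print(f"QALYs: {total_qalys(patient_history):.2f}")
```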

Incremental analysis and healthcare decision-making

In a health economic analysis, two or more competing treatments are compared in an incremental analysis 5. Two key summary statistics are the incremental cost-effectiveness ratio (ICER) and the incremental net benefit (INB). The ICER divides the difference in costs between the two treatments (known as the incremental costs) by the difference in benefits, such as QALYs, between the two treatments (known as the incremental benefits).

The INB is the difference in net benefit of each treatment, where the net benefit of a treatment is the difference between the benefits (QALY) converted to a monetary scale and costs. Benefits are converted onto a monetary scale by multiplying them by a willingness to pay threshold 36. This threshold value represents the willingness of the decision-maker to pay for a treatment and is the additional amount they are prepared to pay for one more unit of benefit, here an additional QALY 5. In the UK, the current threshold value used by NICE is considered to be £20,000 to £30,000 per QALY gained 2.

If the INB is greater than zero the intervention is deemed cost-effective and if it is less than zero it is not deemed cost-effective. If the ICER is less than the willingness to pay threshold the intervention is deemed cost-effective and if it is greater than the threshold it is not deemed cost-effective.
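The two summary statistics and their decision rules can be illustrated with a short sketch. The incremental cost and QALY figures are hypothetical and the function names are assumptions introduced here. Note that the ICER rule as stated applies when the new treatment provides more benefit than the comparator (positive incremental QALYs); the INB rule does not need this restriction, which is one reason the INB is often easier to work with.

```python
def icer(incremental_cost, incremental_qalys):
    """Incremental cost-effectiveness ratio: cost per additional QALY."""
    if incremental_qalys == 0:
        raise ValueError("ICER is undefined when incremental QALYs are zero")
    return incremental_cost / incremental_qalys


def incremental_net_benefit(incremental_cost, incremental_qalys, threshold):
    """INB on the monetary scale: threshold * QALY gain minus extra cost."""
    return threshold * incremental_qalys - incremental_cost


def is_cost_effective(incremental_cost, incremental_qalys, threshold):
    """Apply the INB decision rule (INB greater than zero)."""
    inb = incremental_net_benefit(incremental_cost, incremental_qalys, threshold)
    return inb > 0


if __name__ == "__main__":
    # Hypothetical comparison: 1,500 extra cost for 0.125 extra QALYs.
    extra_cost, extra_qalys, willingness_to_pay = 1_500, 0.125, 20_000
    print(f"ICER: {icer(extra_cost, extra_qalys):,.0f} per QALY")
    inb = incremental_net_benefit(extra_cost, extra_qalys, willingness_to_pay)
    print(f"INB: {inb:,.0f}")
    verdict = is_cost_effective(extra_cost, extra_qalys, willingness_to_pay)
    print(f"Cost-effective: {verdict}")
```

With these hypothetical inputs, both rules agree: the ICER falls below the threshold and the INB is positive.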

Within-trial or trial-based analysis

It is increasingly common for economic evaluations to be ‘piggybacked’ onto a clinical trial where information, such as costs and health related quality of life, is collected from patients during the trial 5, 37, 38. The differences in mean costs and mean QALYs between competing treatments can be estimated from the trial data to calculate an ICER and INB. This then provides evidence of the cost-effectiveness of the treatment when delivered to patients over the duration of the trial.

In context: The HubBLE trial was a multicentre, open-label, parallel-group randomised controlled trial that assessed the clinical and cost-effectiveness of haemorrhoidal artery ligation (HAL) compared with rubber band ligation (RBL) in the treatment of symptomatic second-degree and third-degree haemorrhoids 39. The trial included a within-trial cost-utility analysis 40, which found that the incremental mean total cost per patient was £1,027 for HAL compared to RBL and that incremental QALYs were 0.01. This gave an ICER of £104,427 per QALY, suggesting that HAL was not cost-effective compared to RBL when considering a willingness to pay threshold of £20,000 per QALY gained.

Model-based analysis

A health economic model is used to describe the relationship between a number of inputs including the clinical effectiveness of the intervention, quality of life data, and costs. Unlike the within-trial analysis, information can be synthesised from a range of sources external to the trial 5. The model can be used to supplement a within-trial analysis such as extrapolating beyond the end of the clinical trial 41. Modelling can also be used when a trial is not conducted to compare treatments of interest using evidence synthesis methods 42, 43. Several approaches can be used in a model-based health economic analysis; see summary for more details 44.

In context: The Big CACTUS clinical trial assessed the clinical and cost-effectiveness of computerised speech and language therapy (CSLT) or attention control (AC) added to usual care (UC) for people with long-term post-stroke aphasia 45, 46. A model-based cost-utility analysis was used to examine the long-term cost-effectiveness of CSLT compared to AC and UC. Evidence used from the Big CACTUS trial included estimates of clinical effectiveness and incremental cost and benefits, and evidence external to the trial included post-stroke death rates and age-related mortality risks 47. A Markov model illustrated in Figure 1 (of 47) was used. The cost-effectiveness analysis concluded that CSLT is unlikely to be cost-effective in the whole population considered but could be cost-effective for people with mild or moderate word finding abilities 47.

Adaptive Clinical Trials and Health Economics

Increasingly, clinical trials are used to collect information to inform a health economic analysis following the trial 4. This may include clinical data on primary and secondary outcomes to inform parameters in a health economic model (see model-based analysis above) or costs and quality of life data collected directly from participants during the trial. As the use of adaptive designs increases in practice, they are likely to contribute more to health economic analyses, and it is important to understand the impact adaptive designs have on these analyses 48. Additionally, opportunities are potentially being missed to incorporate health economic considerations into the design and analysis of adaptive designs; for example, at interim decision-making. Failing to consider these issues when planning and conducting an adaptive trial could waste resources and result in unreliable evidence for decision-making.

When using an adaptive design, researchers should consider:

- what impact trial adaptations, such as early trial stopping, have on health economic analyses;

- what statistical adjustments are required to ensure reliable healthcare decisions can still be made;

- how health economics can be incorporated into the design and/or analyses to inform adaptations to improve efficiency in clinical trials (e.g. to decide whether further sampling is required after an interim analysis using the methods described above);

- the practical steps that will enhance successful implementation of clinical trials with an adaptive design and health economic analysis.

In context: The Big CACTUS clinical trial was designed as a fixed sample size (non-adaptive) trial. There was still considerable uncertainty at the end of the trial around the cost-effectiveness of the computerised speech and language therapy (CSLT) 47. This makes it challenging for decision makers to decide whether CSLT should be recommended for use in practice. Had Big CACTUS used an adaptive design and stopped early based on a clinical effectiveness analysis using interim data this would have provided even less data for the health economic analysis. As such this could have created greater uncertainty in the cost-effectiveness results. This may have resulted in further research being required to address these uncertainties before decisions around the adoption of CSLT into practice can be made. This highlights how failing to adequately consider the importance of cost-effectiveness considerations during an adaptive design could diminish the potential benefits of using the adaptive design.

1) Planning and design

Researchers should consider early in the design and planning of the adaptive design whether a health economic analysis is required and if so, what role it should play, at what stage (e.g., design, interim analysis, and/or final analysis) and what impact the design will have on the analyses.

Points to consider include:

- whether it is appropriate to seek the advice of the trial funder, sponsor and technology adoption agency (such as the NIHR, MHRA and NICE) as well as members of the public when determining the role of health economics in a specific trial. This will ensure the requirements of these key stakeholders are met before the trial begins and avoids potentially wasting resources on a trial design that is not acceptable to patients or does not provide the required information for healthcare decision-making and adoption of the treatments into practice 49.

- what resources are required to adequately implement the chosen design? These resources may need to be available to the research team before the trial is funded. This may have implications for the type of design that can be considered. For example, if a health economic model is required to inform the design of the trial, this may not be feasible unless there is an existing model that can be used or adapted. See costing of adaptive trials for related discussion.

- what additional resources will be required to adequately implement the chosen design? This may include resources for additional health economic analyses during the trial or additional health economic expertise in the design and conduct of the trial. While this may not represent an increase in the total resources required, there may be a shift in when health economic resources are required. For example, moving predominantly from the end of the trial to throughout the trial if health economics is assessed at interim analyses.

- consideration of the potential impact of the trial adaptations and interim decisions on the health economic analyses and conclusions. For example, does a decision to stop the trial early because there is sufficient evidence to conclude that the study treatment is clinically effective hinder the ability to answer cost-effectiveness questions reliably to the extent that another trial will be required? It is possible that having a slightly longer delay until the first interim analysis could still result in a meaningful health economic analysis should the trial stop at this stage.

Currently, few clinical trials with an adaptive design and health economic analysis consider opportunities to incorporate health economics into the design and conduct 48. However, taking this approach could increase the efficiency of clinical trials and help research funders allocate their limited funding more efficiently across their portfolio of research. Additionally, given the key role of cost-effectiveness in healthcare decision-making, it is important to link the design and conduct of a trial to the ultimate adoption decision to prevent resources being wasted. PANDA users should read ENACT project outputs for further information 56.

The role of health economics in an adaptive design can be tailored to suit the needs of stakeholders and their willingness to apply this innovative approach in their context. Approaches include (see 49 for more suggestions):

- using early examinations of the trial to check all health economic data are being collected as required and/or to update a health economic model.

- pre-specifying a hierarchy of interim decision rules where any trial adaptations are informed first by clinical outcomes and then, where appropriate, information from a health economic analysis is used to supplement or support adaptations made.

- not using interim health economic analyses alone to inform terminal decisions at an interim analysis, for example, stopping the trial early or dropping a treatment arm. However, interim health economic analyses could be used alone to increase the duration of the trial or to retain a treatment arm.

- only performing health economic analyses after sufficient data have been collected on key clinical outcomes. For example, if there are four planned interim analyses, adaptations at the first two interim analyses may only be informed by clinical outcomes. If the trial progresses to the third and fourth interim analyses adaptation may then be informed by health economic analyses. This may help to address stakeholder concerns that a trial may stop based on early cost-effectiveness evidence before clinical effectiveness evidence is clear and also ensure that sufficient information has been collected for a precise health economic analysis.

- using health economics to guide the choice of trial design, considering both fixed sample size (non-adaptive) designs and adaptive designs 18, 50.
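The hierarchy of interim decision rules described in the list above can be written down as a small pre-specified function. The following is a minimal sketch only, under assumptions that are not taken from any particular trial: the function name `interim_decision`, its arguments, and the default of introducing health economic analyses from the third interim analysis are all hypothetical, and letting a health economic result override a clinical stopping signal in favour of continuing is just one possible reading of "retain a treatment arm or increase the duration of the trial".

```python
from typing import Optional


def interim_decision(
    look: int,
    clinical_rule_met: bool,
    he_favours_continuing: Optional[bool],
    first_he_look: int = 3,  # hypothetical: health economics used from the 3rd look
) -> str:
    """Return "stop" or "continue" for one interim analysis.

    Clinical outcomes are considered first. Health economic (HE) evidence is
    only used from `first_he_look` onwards, and on its own it can only keep
    the trial going; it can never trigger a terminal decision.
    """
    he_in_play = look >= first_he_look and he_favours_continuing is not None

    if not clinical_rule_met:
        # No clinical trigger: an HE result alone never stops the trial.
        return "continue"

    if he_in_play and he_favours_continuing:
        # Assumed reading: HE evidence may extend the trial even though
        # the clinical stopping rule has been met.
        return "continue"

    return "stop"


# Early look: HE evidence is ignored, so the clinical rule decides.
early = interim_decision(look=1, clinical_rule_met=True, he_favours_continuing=True)
# Later look: HE evidence suggests further data are still worthwhile.
later = interim_decision(look=3, clinical_rule_met=True, he_favours_continuing=True)
# HE evidence alone pointing towards stopping has no effect.
he_only = interim_decision(look=4, clinical_rule_met=False, he_favours_continuing=False)
```

Writing the rules in this form before the trial starts makes it easier for stakeholders to see exactly when health economic evidence can and cannot change an interim decision.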

In context – the use of VOIA at interim analyses to inform the decision to continue a trial: The PRESSURE-2 trial aimed to determine the clinical and cost-effectiveness of high specification foam and alternating pressure mattresses for the prevention of pressure ulcers (PUs) 51. This was a randomised, multicentre, phase 3, open-label, parallel-group, adaptive group sequential trial with two interim analyses using double-triangular stopping rules 52. The authors also included health economics as part of the design with an ENBS calculation (described above under VOIA section) at an interim analysis. This analysis proposed using the interim data to determine whether it is cost-effective to continue with the trial from an NHS decision makers’ perspective.

The primary outcome was time to developing a new category 2 or above PU (from randomisation to 30 days from the end of treatment). A total of 2954 patients were required to give a maximum of 588 events to detect a 5% absolute difference in primary endpoint events (a hazard ratio of 0.759), assuming a control incidence rate of 23%, with 90% power for a two-sided test and accounting for a 6% dropout rate. The first planned interim analysis was due to be conducted after 300 events. It was noted that this would give the minimum number of events required for the economic analysis 53, showing how consideration was given to the impact of potential adaptations beyond the primary outcome. However, after approximately 12 months the observed recruitment rate was slower than anticipated. An unplanned VOIA was conducted at the request of the funder (NIHR HTA Programme) to inform whether the trial should continue. This analysis calculated the ENBS using the estimated costs of running trials with different sample sizes and their expected net present value of sampling information. The trial continued, informed by these results, with a second VOIA conducted approximately 28 months into the trial in conjunction with an unplanned interim analysis of clinical effectiveness. The use of VOIA to inform the continuation of the trial highlights the potential for the cost-effectiveness of the research itself to be assessed during adaptive clinical trials. The authors provide a detailed description of the methods and results of each of these analyses in the trial report, allowing other researchers to understand and critique their approach 53.
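To make the idea of comparing trials of different sample sizes concrete, the sketch below computes an ENBS as the population value of sample information minus the cost of the trial. It is an illustration under strong simplifying assumptions, not the PRESSURE-2 analysis: uncertainty about the incremental net benefit (INB) is taken to be normal, the trial has two equal arms, and discounting, time horizons and patients enrolled in the trial are ignored. Every function name and number is hypothetical.

```python
import math
from statistics import NormalDist


def evsi_per_patient(prior_mean, prior_sd, sigma, n_per_arm):
    """Expected value of sample information per future patient.

    Assumes a normal prior on the INB and a two-arm trial whose estimate of
    the INB has sampling variance 2 * sigma**2 / n_per_arm.
    """
    sampling_var = 2 * sigma**2 / n_per_arm
    # Standard deviation of the posterior mean before the data are seen.
    pre_sd = prior_sd**2 / math.sqrt(prior_sd**2 + sampling_var)
    z = prior_mean / pre_sd
    std = NormalDist()
    # E[max(M, 0)] for M ~ Normal(prior_mean, pre_sd^2).
    expected_max = prior_mean * std.cdf(z) + pre_sd * std.pdf(z)
    return expected_max - max(prior_mean, 0.0)


def enbs(n_per_arm, *, prior_mean, prior_sd, sigma,
         population, fixed_cost, cost_per_patient):
    """Expected net benefit of sampling: population EVSI minus trial cost."""
    value = population * evsi_per_patient(prior_mean, prior_sd, sigma, n_per_arm)
    cost = fixed_cost + cost_per_patient * 2 * n_per_arm
    return value - cost


# Hypothetical inputs, in monetary units; none are taken from PRESSURE-2.
inputs = dict(prior_mean=200.0, prior_sd=500.0, sigma=4000.0,
              population=50_000, fixed_cost=500_000.0, cost_per_patient=1_500.0)

candidates = [100, 250, 500, 1_000, 2_000, 5_000]
results = {n: enbs(n, **inputs) for n in candidates}
best_n = max(results, key=results.get)
```

Under these assumptions, continuing to recruit is worthwhile when the ENBS of the additional sample is positive, and the candidate with the largest ENBS is the preferred sample size among those considered.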

2) Conduct and analysis

There are specific considerations that researchers should think about to inform the high-quality conduct and analysis of an adaptive design clinical trial with a health economics analysis.

Proposed health economic analyses should be outlined in a Health Economic and Decision Modelling Analysis Plan (HEDMAP), ideally before the recruitment of the first patient. As in fixed design trials, it is crucial to maintain the validity and integrity of adaptive trials that use health economics. The HEDMAP should include a description of the role of health economics in adaptations and the pre-specified methods used at interim and final analyses 54, 55.

In adaptive designs where health economics is not incorporated into the design or interim decision-making, the adaptive nature of the trial can still affect the health economic analysis following the trial. For example, bias in health economic results can be substantial when a group sequential trial is stopped early unless appropriate bias correction methods are applied to treatment effect estimates. The extent of bias depends on several factors (see Figure 2), including how strongly the health economic outcomes are correlated with the primary outcome (or the outcomes used to inform adaptations), the timing of the interim analysis, the type of stopping rule used, and whether a model-based or a within-trial analysis is used 18.

It is recommended that a health economic analysis that uses data from an adaptive trial considers both an unadjusted analysis, as used for fixed design trials, and an adjusted analysis that accounts for the adaptive nature of the trial when estimating cost-effectiveness. This will prevent or lessen the unintentional introduction of statistical bias that could compromise healthcare decision-making and potentially prevent patients receiving the treatment they need as resources are being wasted on research and treatments that are not cost-effective.

In context: Taken from 18, Figure 2 shows how the bias in the point estimates calculated using data from a group sequential design increases as the correlation between the primary outcome and the within-trial health economic outcomes (costs, QALYs and incremental net benefit (INB)) increases. The magnitude of the bias is affected by both the stopping rule for the group sequential design and the number of analyses conducted during the trial (two or five). The bias-adjusted maximum likelihood estimate is extended to the health economic outcomes to provide an adjusted analysis. This reduces the bias in the point estimates, helping to provide a more accurate within-trial cost-effectiveness analysis even when a group sequential design is used. The problem illustrated here also arises with other types of adaptive designs, such as those that involve treatment or population selection (multi-arm multi-stage, MAMS, and adaptive population enrichment, APE).
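The mechanism behind this bias can be illustrated with a small simulation. The sketch below is not a reproduction of Figure 2 or of the methods in 18: it assumes a simple two-stage design with a single efficacy boundary, equal information at each stage, and stage-level estimates that are bivariate normal. The boundary, the drift of the primary outcome and the function name are all hypothetical, and only the unadjusted (naive) estimate is examined, not the bias-adjusted one.

```python
import math
import random


def simulate_inb_bias(rho, n_sims=100_000, seed=1):
    """Average bias of the naive INB estimate in a two-stage design.

    rho is the correlation between the stage-1 estimates of the primary
    outcome and of the INB. Estimates are in units of their stage-1
    standard error.
    """
    rng = random.Random(seed)
    drift = 1.5      # hypothetical expected stage-1 Z statistic for the primary outcome
    boundary = 2.0   # hypothetical efficacy stopping boundary at the interim analysis
    true_inb = 0.0   # true INB, so any non-zero average estimate is bias

    total_error = 0.0
    for _ in range(n_sims):
        e_primary = rng.gauss(0.0, 1.0)
        e_other = rng.gauss(0.0, 1.0)
        z1 = drift + e_primary
        # Stage-1 INB estimate, correlated with the primary outcome estimate.
        inb1 = true_inb + rho * e_primary + math.sqrt(1.0 - rho**2) * e_other

        if z1 > boundary:
            # Trial stops early: only stage-1 data inform the INB estimate.
            estimate = inb1
        else:
            # Trial continues: stage-2 data are independent of stage 1.
            inb2 = true_inb + rng.gauss(0.0, 1.0)
            estimate = 0.5 * (inb1 + inb2)

        total_error += estimate - true_inb

    return total_error / n_sims


bias_uncorrelated = simulate_inb_bias(rho=0.0)
bias_correlated = simulate_inb_bias(rho=0.8)
```

In this toy setting the naive estimate is close to unbiased when the health economic outcome is uncorrelated with the primary outcome and is biased upwards when the correlation is high, because early stopping selects trials in which both estimates happened to be large.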

3) Practical implementation

To successfully implement a clinical trial using an adaptive design with a health economic analysis, research teams should consider:

- whether it is appropriate to have a health economist on the data monitoring and ethics committee (DMEC). The inclusion of a health economist on the DMEC is important when a health economic analysis is used as part of the design, monitoring, and analysis of adaptive trials. The trial statistician and health economist should work together to develop reports presented to the DMEC in an understandable and interpretable way.

- the trial health economist should regularly attend trial management group (TMG) meetings to stay up to date with the progress of the trial, share expertise relating to the role of health economics in the design and raise practical questions as they arise during the study.

- how the trial health economist and statistician can work together to facilitate the implementation of health economics in adaptive trials by sharing expertise. This may require additional discussions outside of the routine TMG meetings but will avoid duplication of effort when planning, conducting, and reporting analyses. This is especially important when interim analyses are carried out in a short time frame.